These are my notes on setting up TrueNAS, from selecting the hardware to installing and configuring the software. You are expected to have some IT knowledge of hardware and software; these instructions do not cover everything, but they should answer the questions that need answering.

- The TrueNAS documentation is well written and is your friend.

- HeadingsMap Firefox Add-On

- This plugin shows the tree structure of the headings in a side bar.

- It will make using this article as a reference document much easier.

Hardware

I will deal with all things hardware in this section.

My Server Hardware

This is the current configuration of my TrueNAS server; it might get updated over time.

- UPS

- APC Smart-UPS SMT1500IC 1500VA with SmartConnect

- SmartConnect is a con. It started off free, but now you need to pay a subscription, so do not buy the UPS based on this feature.

- Drivers to use for Network UPS Tools (NUT)

- USB: APC ups 2 Smart-UPS (USB) USB (usbhid-ups)

- `apc_modbus`, when available, might offer more features and data; see notes later in this article.

- My APC SMT1500IC UPS Notes | QuantumWarp - These are my notes on using and configuring my APC SMT1500IC UPS.

- PC Case

- Mercury KOB 118

- Case Fans

- 2 x Noctua NF-A9 PWM 92mm Beige/Brown Fan

- These are quiet and variable-speed (PWM).

- Power Supply

- Motherboard

- ASUS PRIME X670-P WIFI

- AM5, DDR5, PCIe 4.0, ATX

- Supports ECC RAM

- CPU

- AMD Ryzen™ 9 7900

- AM5, Zen 4, 12 Core, 24 Thread, 3.7GHz, 5.4GHz Turbo, 64MB Cache, PCIe 5.0, 65W, CPU

- Supports ECC RAM

- CPU Cooler

- AMD Wraith Prism Cooler

- RGB programmable LED with compatible motherboards.

- This came free with my Ryzen CPU.

- RAM

- 4 x 32GB Kingston ECC RAM - KSM48E40BD8KM-32HM

- 32GB DDR5 4800MT/s ECC Unbuffered DIMM CL40 2Rx8 1.1V 288-pin 16Gbit Hynix M

- Total Installed: 128GB

- Disks

- Boot

- 2 x PNY CS900 120GB SSD + 1 spare (not installed)

- Mirrored

- Long Term Storage Pool

- 4 x Western Digital WD Red Plus 3.5" NAS Hard Drive (4TB, 128MB) (WD40EFZX) + 1 spare (not installed)

- The new version WD40EFPX now has 256MB cache

- SATA

- These drives are 512e, but 4Kn drives are better

- RaidZ2 (2 drives can fail, 8TB space)

- VMs and Apps Pool

- 4 x Samsung 980 Pro PCIe 4.0 NVMe M.2 SSD (MZ-V8P1T0BW) + 1 spare (not installed)

- RaidZ2 (2 drives can fail, 2TB space)

- Backplane / Hot-Swap Bays / Bay Enclosure / Drive Bays / Caddy / Enclosure

- Additional NIC (for Virtualised pfSense Router)

- Cisco branded - Intel i350T4V2 with iSCSI NIC (UCSC-PCIE-IRJ45) Quad Port 1Gbps NIC

- Intel® Ethernet Server Adapter I350 | Intel - Drivers, Docs and Utilities
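As a sanity check on the "8TB space" and "2TB space" figures for the two RAIDZ2 pools in the list above, here is a minimal sketch of the arithmetic I use: usable space is roughly (number of disks minus parity disks) times the disk size. It is an approximation only; it ignores ZFS overhead (metadata, padding and reserved slop space), and it also converts decimal TB (as printed on the drive label) into binary TiB, which is closer to what TrueNAS reports.

```python
"""Rough usable-capacity check for the RAIDZ2 pools listed above.

Usable space is approximated as (disks - parity disks) * disk size.
ZFS overhead (metadata, padding, reserved slop space) is ignored, so the
figure TrueNAS shows will be somewhat lower.
"""

# Number of parity disks per vdev type (mirrors are not modelled here).
PARITY = {"stripe": 0, "raidz1": 1, "raidz2": 2, "raidz3": 3}


def raidz_usable_tb(disks: int, disk_tb: float, level: str) -> float:
    """Approximate usable capacity in decimal TB (as printed on drive labels)."""
    parity = PARITY[level]
    if disks <= parity:
        raise ValueError(f"{level} needs more than {parity} disks")
    return (disks - parity) * disk_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


if __name__ == "__main__":
    pools = [
        ("Long Term Storage (4 x 4TB WD Red Plus)", 4, 4.0),
        ("VMs and Apps (4 x 1TB Samsung 980 Pro)", 4, 1.0),
    ]
    for name, disks, size in pools:
        usable = raidz_usable_tb(disks, size, "raidz2")
        print(f"{name}: {usable:.0f} TB (~{tb_to_tib(usable):.2f} TiB), "
              f"survives {PARITY['raidz2']} drive failures")
```

For my pools this gives 8 TB (about 7.28 TiB) and 2 TB (about 1.82 TiB), which is why the TrueNAS GUI shows noticeably less than the label figures even before overhead.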

*** Do NOT use a hardware RAID or a separate software RAID (e.g. mdadm) with TrueNAS or ZFS; this will lead to data loss. ZFS already handles data redundancy and striping across drives, so a RAID is also pointless.***
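The Disks list above notes that the WD Red Plus drives are 512e (512-byte logical sectors emulated on 4096-byte physical sectors). ZFS's `ashift` is log2 of the sector size it writes in, so for both 512e and 4Kn drives you want `ashift=12` (2^12 = 4096 bytes); as far as I know TrueNAS uses 12 by default for new pools. The sketch below assumes a Linux-based TrueNAS SCALE install and reads the standard sysfs attributes `logical_block_size` and `physical_block_size`; the device names (`sda` etc.) are examples only.

```python
"""Report sector sizes and a matching ZFS ashift for Linux block devices.

Reads the sysfs attributes logical_block_size and physical_block_size under
/sys/block/<dev>/queue. Device names such as sda are examples only.
"""
import sys
from pathlib import Path


def classify(logical: int, physical: int) -> str:
    """Label a drive's sector format from its logical/physical sector sizes."""
    if logical == 512 and physical == 4096:
        return "512e"  # 4K physical sectors emulating 512-byte ones
    if logical == physical == 4096:
        return "4Kn"   # native 4K sectors
    if logical == physical == 512:
        return "512n"  # native 512-byte sectors
    return "other"


def ashift_for(sector_bytes: int) -> int:
    """ZFS ashift is log2 of the sector size, e.g. 4096 -> 12."""
    if sector_bytes <= 0 or sector_bytes & (sector_bytes - 1):
        raise ValueError(f"sector size must be a power of two, got {sector_bytes}")
    return sector_bytes.bit_length() - 1


def describe(dev: str, sysfs: Path = Path("/sys/block")) -> str:
    queue = sysfs / dev / "queue"
    logical = int((queue / "logical_block_size").read_text())
    physical = int((queue / "physical_block_size").read_text())
    # Size ashift for the physical sector so 512e drives get 4K-aligned writes.
    return (f"{dev}: logical={logical} physical={physical} "
            f"({classify(logical, physical)}), "
            f"suggested ashift={ashift_for(max(logical, physical))}")


if __name__ == "__main__":
    for dev in sys.argv[1:] or ["sda"]:  # e.g. python3 sectors.py sda sdb sdc sdd
        try:
            print(describe(dev))
        except FileNotFoundError:
            print(f"{dev}: no such block device")
```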

ASUS PRIME X670-P WIFI (Motherboard)

- General

- ASUS PRIME X670 P : I'm not happy! - YouTube

- The PRIME X670-P is a rather good budget board, except it is not priced at a budget level. Its launching price oscillates between 280 and 300 dollars, and that is almost twice its predecessor launching price.

- A review.

- ASUS PRIME X670 P : I'm not happy! - YouTube

- Parts

- Rubber Things P/N: 13090-00141300 (contains 1 pad) (9mm x 9mm x 1mm)

- Standoffs P/N: 13020-01811600 (contains 1 screw and 1 standoff) (7.5mm)

- Standoffs P/N: 13020-01811500 (contains 2 screws and 2 standoffs) (7.5mm) - These appear to be the same as 13020-01811600

- How to turn off all lights

- [HOW TO] Turning off All motherboar lights when shut down. Keep ROG LED when PC is on | Republic of Gamers Forum - I like my room dark when I sleep. So when I build my new ASUS Maximus VII Hero computer and powered off for the first time and noticed all these lights still on! the very next thing I did was google how to turn them OFF! It took me weeks to figure out what to do and I have not seen (using Google) any one posting the complete answer. So I'll try to explain.

- [Motherboard] EZ Update - Introduction | Official Support | ASUS Global

- Diagnostics / QLED

- This board only has Q-LED CORE (the power light flashes codes)

- [Motherboard] ASUS motherboard troubleshooting via Q-LED indicators | Official Support | ASUS Global

- How To Reset ASUS BIOS? All Possible Ways - Most ASUS motherboards offer customizing a wide range of BIOS settings to help optimize system performance. However, incorrectly modifying these advanced options can potentially lead to boot failure or system instability.

- AMD PBO (Precision Boost Overdrive)

- ASUS introduces PBO Enhancement for AMD X670 and B650 motherboards - Advanced thermal control for AMD Ryzen 7000 series processors in the ASUS ROG, TUF Gaming, ProArt and Prime AM5 line of motherboards.

- What is PBO (Precision Boost Overdrive) and Should You Enable It? - AMD has many boosting technologies for its CPUs, and PBO (Precision Boost Overdrive) is one of the most recent. What does PBO do, is it safe to enable? And most importantly: Will PBO increase your performance?

- Understanding Precision Boost Overdrive in Three E... - AMD Community - Precision Boost Overdrive (PBO) is a powerful new feature of the 2nd Gen AMD Ryzen™ Threadripper™ CPUs. Much like traditional overclocking, PBO is designed to improve multithreaded performance. But unlike traditional overclocking, Precision Boost Overdrive preserves all the automated intelligence built into a smart CPU like Ryzen.

- AMD CBS (Custom BIOS Settings)

- AMD Overclocking Terminology FAQ - Evil's Personal Palace - HisEvilness - Paul Ripmeester

- AMD Overclocking Terminology FAQ. This Terminology FAQ will cover some of the basics when overclocking AMD based CPU's from the Ryzen series.

- What is AMD CBS? Custom settings for your Ryzen CPU's that are provided by AMD, CBS stands for Custom BIOS Settings. Settings like ECC RAM that are not technically supported but work with Ryzen CPU's as well as other SoC domain settings.

- AMD Overclocking Terminology FAQ - Evil's Personal Palace - HisEvilness - Paul Ripmeester

- Saving BIOS Settings

- [Motherboard] How to save and load the BIOS settings? | Official Support | ASUS Global

- [SOLVED] - Best way to save BIOS settings before BIOS update? | Tom's Hardware Forum

- Q: I need to update my BIOS to fix an issue. However, I'll lose all my settings after the update. What is the best way to save BIOS settings before an update? I have a ROG STRIX Z370-H GAMING. I wish there was a way to save settings to a file and simply restore.

- A:

- Use your phone to take photos of the settings

- After updating bios it is recommended to load bios defaults from the exit menu so cmos is refreshed with new system parameters.

- Some boards do have that feature. On my MSI B450M Mortar I can save settings to a file on a USB stick, for instance. But it's next to useless as anytime I've updated BIOS and then gone to attempt reloading settings from the stick it just refuses because settings were for an earlier BIOS rev. That makes sense because I'm sure all settings are is a bitmapped series of ones and zeroes that will have no relevance from BIOS rev to rev.

- In essence, it's a broken feature. My MOBO has the same "feature." It can save settings, profiles, but they are not compatible with new revisions of the BIOS.

- I've now started keeping a record of the changes I make. Taking photos of BIOS settings displays is one way to keep a record. But I'm keeping a written log of BIOS settings changes, and annotating it with the reasons why I made each change.
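Since saved BIOS profiles do not survive a firmware update, a plain written log is the most reliable record. Below is a minimal sketch of such a log as an append-only CSV file, one row per change with the reason alongside it. The file name `bios_changes.csv`, the column layout and the example values are my own illustrative choices, not an ASUS format; the menu path is the Memory Context Restore path quoted later in these notes.

```python
"""Append-only log of BIOS setting changes, with the reason for each change.

Hypothetical layout: the file name and columns are illustrative choices,
not an ASUS or BIOS export format.
"""
import csv
from datetime import date
from pathlib import Path

FIELDS = ["date", "bios_version", "menu_path", "setting", "old", "new", "reason"]


def log_change(path: Path, **entry: str) -> None:
    """Append one change; writes the header row if the file is new."""
    missing = [f for f in FIELDS if f not in entry and f != "date"]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    entry.setdefault("date", date.today().isoformat())
    is_new = not path.exists()
    with path.open("a", newline="") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow(entry)


if __name__ == "__main__":
    # Example entry only; the old/new values are illustrative.
    log_change(
        Path("bios_changes.csv"),
        bios_version="1654",
        menu_path="Advanced > AMD CBS > UMC Common Options > DDR Options > DDR Memory Features",
        setting="Memory Context Restore",
        old="Auto",
        new="Enabled",
        reason="Shorten the long DDR5 memory-training POST",
    )
```

A CSV opens in any spreadsheet and, unlike a BIOS profile file, is still readable after a firmware update.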

- Flashing BIOS

BIOS upgrading through the BIOS GUI is not reliable

- It has failed me twice and each time I had to use the `Flash by USB` method.

- After a flash, if the power LED (usually green) is still flashing 20 minutes later, something is wrong: you can assume the firmware flash failed somewhere. The PC will not boot, or even POST.

- At the beginning of the flash you will sometimes hear a beep code; I am not sure what its purpose is.

Solution: flash the firmware using the USB method below.

- [Motherboard] EZ Update - Introduction | Official Support | ASUS Global - How to update the Motherboard BIOS in Windows using the `AI Suite`

- How to Update ASUS Motherboard BIOS in Windows | ASUS SUPPORT - YouTube - ASUS EZ Update provides an easy way to update your BIOS file to the latest version.

- These 2 BIOS features make bricked PCs a thing of the past

- The old days of worrying during every BIOS update are gone.

- Modern motherboards are almost unbrickable now; this article lists the different safeguards.

- ASUS BIOS FlashBack Tool (Emergency flash via USB / Flash Button Method)

To use BIOS FlashBack:

- Download the firmware for your motherboard, paying great attention to the model number

- i.e. `PRIME X670-P WIFI BIOS 1654`, not `PRIME X670-P BIOS 1654`

- Run the rename app (BIOSRenamer) supplied with the firmware download to rename the firmware file.

- This is required for the tool to recognise the firmware. I would guess this is to prevent accidental flashing.

- Place this firmware in the root of an empty FAT32-formatted USB pendrive.

- I recommend this pendrive has an access light so you can see what is going on.

- With the computer powered down, but still plugged in and the PSU still on, insert the pendrive into the correct BIOS FlashBack USB socket for your motherboard.

- Press and hold the FlashBack button for 3 flashes and then let go:

- Flashing Green LED: the firmware upgrade is in progress. The LED keeps flashing green until the flash is finished, which takes 8 minutes at most, and then turns off and stays off. Mine took 5 minutes, but I would leave it for 10 minutes to be sure. The pendrive is accessed at regular intervals, but not as often as you would think.

- Solid Green LED: the firmware flash never started. This is probably because the firmware is the wrong one for your motherboard or the file has not been renamed. With this outcome you will always see the USB drive accessed once on the pendrive's activity light (if it has one).

- Red LED: the firmware update failed during the process.
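To judge whether a FlashBack run is taking too long, the flash time can be estimated as image size divided by write speed. This sketch assumes a 16-32 MB image and a write speed of about 100 KB/s, figures quoted in one of the forum-style answers linked in this section rather than anything I measured on the X670-P. At those figures a 32 MB image takes roughly 5.5 minutes, which fits both my 5-minute flash and ASUS's 8-minute maximum.

```python
"""Back-of-the-envelope BIOS flash duration: image size / write speed.

The 16-32 MB image sizes and ~100 KB/s write speed are assumptions taken from
a forum answer, not measurements of the X670-P's flash chip.
"""


def flash_seconds(image_mb: float, write_kb_per_s: float = 100.0) -> float:
    """Estimated seconds to write image_mb megabytes (1 MB = 1024 KB)."""
    if write_kb_per_s <= 0:
        raise ValueError("write speed must be positive")
    return image_mb * 1024 / write_kb_per_s


if __name__ == "__main__":
    for size in (16, 32):
        secs = flash_seconds(size)
        print(f"{size} MB at 100 KB/s: {secs:.0f} s (~{secs / 60:.1f} min)")
```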

- Download the firmware for you motherboard paying great attention to the model number

- [Motherboard] How to use USB BIOS FlashBack? | Official Support | ASUS Global

- Use situation: If your Motherboard cannot be turned on or the power light is on but not displayed, you can use the USB BIOS FlashBack™ function.

- Requirements Tool: Prepare a USB flash drive with a capacity of 1GB or more. *Requires a single sector USB flash drive in FAT16 / 32 MBR format.

- [Motherboard] How to use USB BIOS FlashBack? | Official Support | ASUS USA

- How long is BIOS flashback? - CompuHoy.com

- How long should BIOS update take? It should take around a minute, maybe 2 minutes. I’d say if it takes more than 5 minutes I’d be worried but I wouldn’t mess with the computer until I go over the 10 minute mark. BIOS sizes are these days 16-32 MB and the write speeds are usually 100 KB/s+ so it should take about 10s per MB or less.

- This page is loaded with ads.

- What is BIOS Flashback and How to Use it? | TechLatest - Do you have any doubts regarding BIOS Flashback? No issues, we have got your back. Follow the article till the end to clear doubts regarding BIOS Flashback.

- FIX USB BIOS Flash Button Not Working MSI ASUS ASROCK GIGABYTE - YouTube | Mike's unboxing, reviews and how to

- Make sure the USB pendrive is correctly formatted.

- Try other flash drives, it is really picky sometimes.

- The biggest problem with USB qflash or mflash or just USB BIOS flash back buttons in general is the USB stick not being read properly, this is mainly due to a few possible problems one being drive incompatibility, another being incorrect or wrong BIOS file and the other is the drive not being recognised.

- On MSI motherboards this is commonly shown by the mflash LED flashing 3 times then nothing or a solid LED, no flashing or quick flashing.

- So in this video i'll show you how to correctly prepare your USB flash drive or thumb drive so it has maximum chance of working first time!

- Help: Asus Prime X670-P WiFi won't update bios (What motherboard replacement?) | TechPowerUp Forums

- The biosrenamer is for renaming the bios to something specific that the bios flashback to read for the function the universal name is ASUS.CAP and then each board have a specific name, for mine it's PX670PW.CAP.
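The rename step only gives the firmware the file name FlashBack looks for; per the TechPowerUp thread above, the PRIME X670-P WIFI expects `PX670PW.CAP`. The sketch below is a hypothetical helper that copies a downloaded `.CAP` file to the root of the USB stick under that name and refuses to use a stick that is not empty. It does not check the stick's FAT32/MBR formatting, and other boards use different names, so ASUS's own BIOSRenamer remains the supported route.

```python
"""Copy a downloaded ASUS firmware file to a USB stick under the FlashBack name.

Hypothetical helper. PX670PW.CAP is the name reported for the PRIME X670-P
WIFI; other boards use different names. FAT32 formatting is not checked.
"""
import shutil
import sys
from pathlib import Path

FLASHBACK_NAME = "PX670PW.CAP"  # board-specific; a wrong name gives a solid green LED


def stage_firmware(cap_file: Path, usb_root: Path, name: str = FLASHBACK_NAME) -> Path:
    """Copy cap_file to usb_root/name and return the new path."""
    if cap_file.suffix.upper() != ".CAP":
        raise ValueError(f"expected a .CAP firmware file, got {cap_file.name}")
    if any(usb_root.iterdir()):
        raise RuntimeError(f"{usb_root} is not empty; FlashBack wants an empty stick")
    target = usb_root / name
    shutil.copyfile(cap_file, target)
    return target


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: stage_firmware.py <downloaded .CAP file> <USB stick mount point>")
    else:
        print(f"Staged {stage_firmware(Path(sys.argv[1]), Path(sys.argv[2]))}")
```

Usage would look like `python3 stage_firmware.py <downloaded file>.CAP /media/usb`, where the mount point is an example and depends on your OS.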

- Configuring the BIOS

- How To Navigate And Set Up Your ASUS BIOS Easily - Looking to setup your ASUS BIOS for the first time? Here's a detailed guide that covers both basic and advanced hardware-level settings.

- A Guide to BIOS Profiles & Settings for ASUS motherboards | Articles from UK Gaming Computers - Find out how to load a BIOS profile and more using this handy support guide.

- How to Optimize the Memory Performance by setting XMP or EXPO on ASUS Motherboard? | ASUS SUPPORT - YouTube - To boost your motherboard's memory performance and improve your gaming experience, watch this video. It shows a simple tweak that will help you enjoy smoother gameplay!

- 6 BIOS settings every new PC builder needs to know about

- We know you want to install your games, but first, you need to handle a few things in the BIOS

- XMP or EXPO for your RAM.

- BIOS POST is extremely long

- This can be an alarming problem: when you first power on the server, you will think you have broken your motherboard or CPU. With 128GB of RAM installed, the PC can take up to 20 minutes to POST the first time; on my system, POSTs after this usually take about 11 minutes.

- Symptoms

- After building my PC it does not make any beeps or POST.

- Sometimes the power light flashes

- I can always get into the BIOS on first boot after I have wiped the BIOS.

- However, after further examination, I found my motherboard actually just takes 20 minutes to POST on an initial run and up to 10 minutes on subsequent runs.

- Things I tried

- Upgrading the BIOS.

- Clearing the BIOS with the jumper.

- Clearing the BIOS with the jumper and then pulling the battery out.

- Cause

- On the first boot the computer is building a memory profile or even just testing the RAM. I have 128GB RAM in so it takes a lot longer to finish what it is doing.

- Issues with the firmware

- Solution

- Wait for the computer to finish these tests; it is not broken. My PC took 18m55s to POST, so you should wait 20 minutes.

- Update the firmware.

- Notes

- The more RAM you have the longer POST takes.

- Even if I fix the POST time, the initial run will always generate a long POST while it builds certain memory mappings and configs in the BIOS.

- My board has Q-LED Core, which uses the power light to indicate status. If the power light is flashing or on, the computer is alive and you should just wait.

- Of course you have double checked all of the connections on the motherboard.

- After this initial boot the PC will boot up in a normal time (usually under a minute but might be 2-3 depending on your setup). Mine still takes about 10 minutes.

- The boot time will go back to this massive time if you alter any memory settings in the BIOS or wipe the BIOS. Upgrading the BIOS will also have this effect.

- I removed my old 4-port NIC and put a newer one back in. The server booted normally (i.e. an almost instant POST), but only that first time; after this initial boot it went back to the long POST.

- Asus X670E boot time too long - Republic of Gamers Forum - 906825

- Q: I am have an issue where my boot up time for my new PC is very slow. i know that the first time boot up when i built the PC is long but this is getting ridiculous.

- A:

- All DDR5 systems have longer boot times than DDR4 since they have to do memory tests.

- Enable Context Restore in the DDR Settings menu of BIOS, you might have another one boot after that which is long, but subsequent boots should me much quicker, until you do a BIOS update or clear CMOS

- Context Restore retains the last successful POST. POST time depends on the memory parameters and configuration.

- It is important to note that settings pertaining to memory training should not be altered until the margin for system stability has been appropriately established.

- The disparity between what is electrically valid in terms of signal margin and what is stable within an OS can be significant depending on the platform and level of overclock applied. If we apply options such as Fast Boot and Context Restore and the signal margin for error is somewhat conditional, changes in temperature or circuit drift can impact how valid the conditions are within our defined timing window.

- Whilst POST times with certain memory configurations are long, these things are not there to irritate us and serve a valid purpose.

- Putting the system into S3 Resume is a perfectly acceptable remedy if finding POST / Boot times too long.

- B650E-F GAMING WIFI slow boot time with EXPO enabl... - Page 2 - Republic of Gamers Forum - 919610

- "Memory Context Restore"

- Solved: Crosshair X670E Hero - Long time to POST - Q-Code ... - Republic of Gamers Forum - 957938

- "Memory Context Restore"

- Advanced --> AMD CBS --> UMC Common Options --> DDR Options --> DDR Memory Features --> Memory Context Restore

- Long AM5 POST times | TechPowerUp Forums

- This is on a Gigabyte X670 Aorus Elite AX using latest BIOS and G.Skill DDR5 6000 CL30-40-40-96 (XMP kit, full part no in my system specs).

- On every boot/reboot it takes 45 seconds to complete POST and the DRAM LED on the board is lit for the vast majority of the time. This only happens when the XMP profile is enabled, it only takes 12-15 seconds w/o XMP enabled.

- Read W1zzard's review as he discusses the long boot time issue with AM5, in specific the 7950X:

- The more RAM the longer the post time. Mine is EXPO rather than XMP, but from what I've gathered across the forums, that shouldn't make a difference.

- Every single time the MB boots, it does some memory training. The first time you enable XMP, its like 2-3 minutes, every time after that is 30~ seconds. I did notice a option to disable the memory extra memory training, but it did some wacky stuff to perf. Also I see you have dual-rank memory. Those take even longer to boot I've noticed. I spend a lot of time watching the codes haha.

- Its deep in the menu for some reason. I think a earlier BIOS had it next to everything else on the Tweaker tab.

- Advanced BIOS (F2) > Settings Tab > AMD CBS > UMC Common Options > DDR Options > DDR Memory Features > Memory Context Restore

- Press Insert KEY while highlighting DDR Memory Features to add it to the Favorites Tab (F11)

- Thanks, POST now takes 21 seconds instead of 45 to complete!

- For AM5 it appears it does. The BIOS the boards initially shipped with were especially bad. Remember the AsRock memory slot stickers that made the news at launch?

- See the picture in the thread.

- 1st boot after clear CMOS (with 4 x 32GB) = 400 seconds (6min 40s)

- AMD Ryzen 9 7950X Review - Impressive 16-core Powerhouse - Value & Conclusion | TechPowerUp - Very long boot times

- During testing I didn't encounter any major bugs or issues; the whole AM5 / X670 platform works very well considering how many new features it brings; there's one big gotcha though and that's startup duration.

- When powering on for the first time after a processor install, your system will spend at least a minute with memory training at POST code 15 before the BIOS screen appears. When I first booted up my Zen 4 sample I assumed it was hung and kept resetting/clearing CMOS. After the first boot, the super long startup times improve, but even with everything setup, you'll stare at a blank screen for 30 seconds. To clarify: after a clean system shutdown, without loss of power, when you press the power button you're still looking at a black screen for 30 seconds, before the BIOS logo appears. I find that an incredibly long time, especially when you're not watching the POST code display that tells you something is happening. AMD and the motherboard manufacturers say they are working on improving this—they must. I'm having doubts that your parents would accept such an experience as an "upgrade," considering their previous computer showed something on-screen within seconds after pressing the power button.

- Update Sep 29: I just tested boot times using the newest ASUS 0703 Beta BIOS, which comes with AGESA ComboAM5PI 1.0.0.3 Patch A. No noticeable improvement in memory training times. It takes 38 seconds from pressing the power button (after a clean Windows shutdown), until there ASUS BIOS POST screen shots. After that, the usual BIOS POST stuff happens and Windows still start, which takes another 20 seconds or so.

- ASRock's X670 Motherboards Have Numerous Issues... With DRAM Stickers | TechPowerUp

- This one is likely to go down ASRock's internal history as a failure of sticking proportions. Namely, it seems that some ASRock motherboards in the newly-released AM5 X670 / X670E family carry stickers overlaid on the DDR5 slots.

- The idea was to provide users with a handy, visually informative guide on DDR5 memory stick installations and a warning on abnormally long boot times that were to be expected, according to RAM stick capacity.

- But it seems that these low-quality stickers are being torn apart as users attempt to remove them, leaving behind remnants that are extremely difficult to clean up and which can block DRAM installation entirely or partially.

CPU and Cooler

- AMD 7900 CPU

- Ryzen 9 7900x Normal Temps? - CPUs, Motherboards, and Memory - Linus Tech Tips

- Q: Hey everyone! So I recently got a r9 7900x coupled to a LIAN LI Galahad 240 AIO. It idles at 70C and when I open heavier games the temps spike to 95C and then goes to 90C constantly. I think that this is exaggerated and I will need to repaste and add a lot more paste. This got me wondering though...what's normal temps for the 7900x? I was thinking a 30-40 idle and 85 under load for an avg cpu. Is this realistic?

- A: The 7900x is actually built to run at 95c 24/7. its confirmed by AMD. Its very different compared to any other CPU architecture on the market. Ryzen 7000 CPUs are defaulted to boost to whatever cooler it has until 95⁰C. It is the setpoint.

- Ryzen 9 7900x idle temp 72-82 should i return the cpu? - AMD Community

- Hi, I just built my first PC in a long time after I switched to mac, and I chose the 7900x with the Noctua NH-U12S redux with 2 Fans. The first day it ran at around 50C but when booted to bios. When I run windows and look at the temp it always at 72-75 at idle, and when I open visual studio or even Spotify it goes up to 80 -82. I'm getting so confused because everywhere I read people say these processors run hot but at full load its normal for it to operate at 95.. (in cinebench while rendering with all cores it goes up to 92-95).

- The Maximum Operating Temperature of your CPU is 95c. Once it reaches 95c it will automatically start to throttle and slow down and if it can't it will shut down your computer to prevent damage.

- Best Thermal Paste for AMD Ryzen 7 7700X – PCTest - Thermal paste is an essential component of any computer system that helps to transfer heat from the CPU to the cooler. It is important to choose the right thermal paste for your system to ensure optimal performance. In this article, we will discuss some of the best thermal pastes for AMD Ryzen 7 7700X. We will provide you with a comprehensive guide on how to choose the right thermal paste for your system and what factors you should consider when making your decision. We will also provide you with a detailed review of each of the thermal pastes we have selected and explain why they are the best options for your system. So, whether you are building a new computer or upgrading an existing one, this article will help you make an informed decision about which thermal paste to use.
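Given the 95°C figure quoted in the threads above, it helps to read the CPU temperature directly on the server. This sketch assumes a Linux-based TrueNAS SCALE install with the `k10temp` hwmon driver loaded, and that its `temp1_input` is Tctl reported in millidegrees Celsius; I have not confirmed the sensor layout for every Zen 4 BIOS, so treat it as an assumption.

```python
"""Read the Ryzen CPU temperature from the Linux k10temp driver.

Assumes TrueNAS SCALE (Linux) with the k10temp hwmon driver loaded, and that
temp1_input is Tctl in millidegrees Celsius. 95 C is the Ryzen 7000 limit
quoted in the threads above.
"""
from pathlib import Path

TJ_MAX_C = 95.0


def find_k10temp(hwmon_root: Path = Path("/sys/class/hwmon")) -> Path:
    """Return the hwmon directory whose 'name' file says k10temp."""
    if not hwmon_root.is_dir():
        raise FileNotFoundError(f"{hwmon_root} does not exist")
    for hwmon in sorted(hwmon_root.glob("hwmon*")):
        name_file = hwmon / "name"
        if name_file.exists() and name_file.read_text().strip() == "k10temp":
            return hwmon
    raise FileNotFoundError("no k10temp hwmon device found")


def cpu_temp_c(hwmon_root: Path = Path("/sys/class/hwmon")) -> float:
    """CPU temperature in degrees Celsius."""
    raw = (find_k10temp(hwmon_root) / "temp1_input").read_text()
    return int(raw) / 1000.0


if __name__ == "__main__":
    try:
        temp = cpu_temp_c()
    except FileNotFoundError as err:
        print(f"Could not read CPU temperature: {err}")
    else:
        headroom = TJ_MAX_C - temp
        print(f"Tctl: {temp:.1f} C ({headroom:.1f} C below the {TJ_MAX_C:.0f} C limit)")
```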

- AMD Wraith Prism Cooler

- Lights

- How to turn off all RGB lights on my 3700X PC build - NetOSec

- RGB lights in a PC are beautiful. But there are times when you don't want it to show off. Here's how I tweak my build to run in stealth mode.

- Covers how to turn off the fan lights via the USB.

- Covers other LED lighting systems as well.

- Explained: How To Change RGB On AMD Wraith Prism Cooler? | Tech4Gamers

- We explain how to change RGB on your AMD Wraith Prism Cooler, covering the need-to-knows and also the different software you can use.

- You can only control all three RGB lights (ring, logo, and fan) on the Wraith Prism cooler if you plug in ONLY the USB cable into the cooler, not by plugging in both or just plugging in the RGB cable.

- You can use many different software to control the RGB on your AMD Wraith Prism cooler. These include Cooler Master’s Wraith Prism software, motherboard-specific software (Gigabyte RGB Fusion, MSI Mystic Light, etc.), or Razer’s Chroma software.

- How to turn off all RGB lights on my 3700X PC build - NetOSec

- Installation

- The black plastic handle should go to the top of the motherboard.

- How to install an AMD Wraith Prism or Wraith Max CPU Cooler #Shorts - YouTube - This short demonstrates how to install an AMD Wraith Prism or Wraith Max CPU Cooler onto an AMD Motherboard after the AMD CPU Chip has been installed.

- pc tips for beginners: amd am4 wraith prism rgb cooler install - YouTube - To install your amd wraith prism rgb cpu cooler is pretty simple hope you can do the same now with some guide don't be scared we all learn sometime.

- How To Install AMD Ryzen AM5 Stock Cooler Wraith Stealth Prism RGB - YouTube

- How to install the AMD stock coolers on the new AM5 motherboard platform.

- Full tutorial.

- Removal

- How to Properly Remove an AMD Wraith Prism Cooler from an AMD CPU / Motherboard #Shorts - YouTube

- This #Shorts video demonstrates how to properly remove an AMD Wraith Prism cooler (or equivalent) from an AM4 motherboard, without accidentally pulling the CPU out of its socket.

- Run the system for about 30 minutes before removing the cooler; warm thermal paste is softer, which helps prevent the cooler ripping the CPU out of its socket.

Asus Hyper M.2 x16 Gen 4 Card

- Asus Hyper M.2 x16 Gen 4 Card Review & Unboxing - Creating a Raid 0 Drive x570 AMD Chipset - YouTube

- Review

- Buy a support bracket because the card is very heavy. Will my motherboard be OK because it has a steel-reinforced PCIe slot? So far it has been, for almost a year.

- [Motherboard] Compatibility of PCIE bifurcation between Hyper M.2 series Cards and Add-On Graphic Cards | Official Support | ASUS USA - Asus HYPER M.2 X16 GEN 4 CARD Hyper M.2 x16 Gen 4 Card configuration instructions.

- Rubber Things P/N: 13090-00070300

- Standoffs P/N: 13020-01811700 (contains 1 screw and 1 standoff) (2.5mm)

Asus Accessories

- Asus Standoffs

- If you need more standoffs search on ebay for `PC Screws M.2 SSD NVMe Screws Mounting Kit for ASUS Motherboard Stainless Steel`

- M.2 correct standoff / screw size for Asus Rog Zenith II Extreme Alpha? - Storage Devices - Linus Tech Tips

- Has a picture of a 13020-01811700 next to 13020-01811600.

- ASUS Rubber Pads / "M.2 rubber pad"

- These are not thermal transfer pads; they are just pads that push the NVMe drive upwards for good contact with the thermal pad on the heatsink above. They are more useful for longer NVMe boards, as these tend to bow in the middle.

- M.2 rubber pad for ROG DIMM.2 - Republic of Gamers Forum - 865792

- I found the following rubber pad in the package of the Rampage VI Omega. Could you please tell me where I have to install this?

- This thread has pictures of how a single pre-installed rubber pad looks and shows you the gap and why with single sided NVMe you need to install the second pad on top.

- This setup uses 2 pads of different thickness, but ASUS has changed from you swapping the pads to you sticking another one on top of the pre-installed pad.

- M.2 rubber pad on Asus motherboard for single-sided M.2 storage device | Reddit

- Q:

- I want to insert a Samsung SSD 970 EVO Plus 1TB in a M.2 slot of the Asus ROG STRIX Z490-E GAMING motherboard.

- The motherboard comes with a "M.2 Rubber Package" and you can optionally put a "M.2 rubber pad" when installing a "single-sided M.2 storage device" according to the manual: https://i.imgur.com/4HP37NX.webp

- From my understanding, this Samsung SSD is single-sided because it has chips on one side only.

- What is this "rubber pad" for? Since it's apparently optional, what are the advantages and disadvantages of installing it? The manual doesn't even explain it, and there are 2 results about it on the whole Internet (besides the Asus manual).

- A:

- I found this thread with the same question. Now that I've actually gone through assembly, I have some more insight into this:

- My ASUS board has a metal heat sink that can screw over an M.2. On the underside of the heat sink, there's a thermal pad (which has some plastic to peel off).

- The pad on the motherboard is intended to push back against the thermal pad on the heat sink in order to minimize bending of the SSD and provide better contact with the thermal pad. I now realize that the reason ASUS only sent 1 stick-on for a single-sided SSD, is because there's only 1 metal heat sink; the board-side padding is completely unnecessary without the additional pressure of the heat sink and its thermal pad, so slots without the heat sink don't need that extra stabilization.

- So put the extra sticker with the single-sided SSD that's getting the heat sink, and don't worry about any other M.2s on the board. I left it on the default position by the CPU since it's between that and the graphics card, which makes it the most likely to have any temperature issues.

- M.2 / NVMe Thermal Pads

- Best Thermal Pad for M.2 SSD – PCTest - Using a thermal pad on an M.2 SSD is a great way to help keep it running cool and prevent throttling. With M.2 drives becoming increasingly popular, especially in gaming PCs and laptops where heat dissipation is critical, having the right thermal pad is important. In this guide, we’ll cover the benefits of using a thermal pad with an M.2 drive, factors to consider when choosing one, and provide recommendations on the best M.2 thermal pads currently available.

Case Fans

- Noctua NF-A9 PWM Case Fan

- Noctua NF-S12A Review & Installation - Make Your Computer Quiet - YouTube - Shows you how to install with the antivibration rubbers.

- Noctua NF A9 92mm PWM Fan Installation on DELL G5 - YouTube - This shows the fan installed with the antivibration rubbers.

Hardware Selection

These links will help you find the kit that suits your needs best.

- If you are a company, buy a prebuilt system from iXSystems, do not roll your own.

- Only use CMR based hard disks when building your NAS with traditional drives.

- SSDs and NVMe drives can be used, but they are not recommended for long-term storage.

General

- SCALE Hardware Guide | Documentation Hub

- Describes the hardware specifications and system component recommendations for custom TrueNAS SCALE deployment.

- From repurposed systems to highly custom builds, the fundamental freedom of TrueNAS is the ability to run it on almost any x86 computer.

- This is a definite read before purchasing your hardware.

- TrueNAS Mini - Enterprise-Grade Storage Solution for Businesses

- TrueNAS Mini is a powerful, enterprise-grade storage solution for SOHO and businesses. Get more out of your storage with the TrueNAS Mini today.

- TrueNAS Minis come standard with Western Digital Red Plus hard drives, which are especially suited for NAS workloads and offer an excellent balance of reliability, performance, noise-reduction, and power efficiency.

- Regardless of which drives you use for your system, purchase drives with traditional CMR technology and avoid those that use SMR technology.

- (Optional) Boost performance by adding a dedicated, high-performance read cache (L2ARC) or by adding a dedicated, high-performance write cache (ZIL/SLOG)

- I don't need this, but it is there if needed.

Tools

- Free RAIDZ Calculator - Calculate ZFS RAIDZ Array Capacity and Fault Tolerance.

- Online RAIDZ calculator to assist ZFS RAIDZ planning. Calculates capacity, speed and fault tolerance characteristics for RAIDZ0, RAIDZ1, and RAIDZ3 setups.

- This RAIDZ calculator computes zpool characteristics given the number of disk groups, the number of disks in the group, the disk capacity, and the array type both for groups and for combining. Supported RAIDZ levels are mirror, stripe, RAIDZ1, RAIDZ2, RAIDZ3.
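The core arithmetic these calculators do for a single vdev can be sketched in a few lines of Python. This is a rough model under stated assumptions: every disk is the same size, and it ignores ZFS metadata, padding, reserved (slop) space and the TB versus TiB difference, so TrueNAS will report less than these numbers. The function name `usable_tb` is just for illustration.

```python
"""Rough usable-capacity estimate for a single ZFS vdev (sketch only)."""

# Number of disks' worth of parity for each RAIDZ level.
PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def usable_tb(layout: str, disks: int, disk_tb: float) -> tuple[float, int]:
    """Return (approximate usable TB, number of disk failures tolerated)."""
    if layout == "stripe":
        # No redundancy: all space is usable, no failures tolerated.
        return disks * disk_tb, 0
    if layout == "mirror":
        # Every disk holds the same data; any (disks - 1) of them can fail.
        return disk_tb, disks - 1
    parity = PARITY[layout]
    if disks <= parity:
        raise ValueError(f"{layout} needs more than {parity} disks")
    return (disks - parity) * disk_tb, parity


for layout in ("stripe", "mirror", "raidz1", "raidz2"):
    tb, tolerated = usable_tb(layout, 4, 4.0)
    print(f"{layout:7} 4 x 4TB -> ~{tb:.0f}TB usable, survives {tolerated} failure(s)")
```

With 4 x 4TB disks (the same disks as my storage pool), RAIDZ1 gives roughly 12TB and survives one failure, while RAIDZ2 gives roughly 8TB and survives two. Note that `mirror` here means one 4-way mirror; a pool of two 2-disk mirrors is two vdevs, so you would add up two `usable_tb("mirror", 2, 4.0)` results.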

Other People's Setups

- My crazy new Storage Server with TrueNAS Scale - YouTube | Christian Lempa

- In this video, I show you my new storage server that I have installed with TrueNAS Scale. We talk about the hardware parts and things you need to consider, and how I've used the software on this storage build.

- A very detailed video, watch before you purchase hardware.

- Use ECC memory

- He installed 64GB, but he has a file cache configured.

- Don't buy a chip with an IGP (integrated graphics); they don't tend to support ECC memory.

- ZFS / TrueNAS Best Practices? - #5 by jode - Open Source & Web-Based - Level1Techs Forums - You hint at a very diverse set of storage requirements that benefit from tuning and proper storage selection. You will find a lot of passionate zfs fans because zfs allows very detailed tuning to different workloads, often even within a single storage pool. Let me start to translate your use cases into proper technical requirements for review and discussion. Then I’ll propose solutions again for discussion.

UPS

- My APC SMT1500IC UPS Notes | QuantumWarp - These are my notes on using and configuring my APC SMT1500IC UPS.

Motherboard

- Make sure it supports ECC RAM.

- Use the Motherboard I am using.

CPU and Cooler

- Make sure it supports ECC RAM.

- Use the CPU and Cooler I am using.

RAM

Use ECC RAM if you value your data

- All TrueNAS hardware from iXsystems comes with ECC RAM.

- ECC RAM - SCALE Hardware Guide | Documentation Hub

- Electrical or magnetic interference inside a computer system can cause a spontaneous flip of a single bit of RAM to the opposite state, resulting in a memory error. Memory errors can cause security vulnerabilities, crashes, transcription errors, lost transactions, and corrupted or lost data. So RAM, the temporary data storage location, is one of the most vital areas for preventing data loss.

- Error-correcting code or ECC RAM detects and corrects in-memory bit errors as they occur. If errors are severe enough to be uncorrectable, ECC memory causes the system to hang (become unresponsive) rather than continue with errored bits. For ZFS and TrueNAS, this behaviour virtually eliminates any chances that RAM errors pass to the drives to cause corruption of the ZFS pools or file errors.

- To summarize the lengthy, Internet-wide debate on whether to use error-correcting code (ECC) system memory with OpenZFS and TrueNAS: Most users strongly recommend ECC RAM as another data integrity defense.

- However:

- Some CPUs or motherboards support ECC RAM but not all

- Many TrueNAS systems operate every day without ECC RAM

- RAM of any type or grade can fail and cause data loss

- RAM failures usually occur in the first three months, so test all RAM before deployment.

- TrueNAS on system without ECC RAM vs other NAS OS | TrueNAS Community

- If you care about your data, intend for the NAS to be up 24x365, last for >4 years, then ECC is highly recommended.

- ZFS is like any other file system: send corrupt data to the disks and you have corruption that can't be fixed. People say "But, wait, I can FSCK my EXT3 file system". Sure you can, and it will likely remove the corruption and any data associated with that corruption. That's data loss.

- However, with ZFS you can't "fix" a corrupt pool. It has to be rebuilt from scratch, and likely restored from backups. So, some people consider that too extreme and use ECC. Or don't use ZFS.

- All that said, ZFS does do something that other file systems don't. In addition to any redundancy (RAID-Zx or mirroring), ZFS stores 2 copies of metadata and 3 copies of critical metadata. That means if 1 block of metadata is both corrupt AND ZFS can detect that corruption (no certainty), ZFS will use another copy of the metadata and then fix the broken metadata block(s).

- OpenMediaVault vs. TrueNAS (FreeNAS) in 2023 - WunderTech

- Another highly debated discussion is the use of ECC memory with ZFS. Without diving too far into this, ECC memory detects and corrects memory errors, while non-ECC memory doesn’t. This is a huge benefit, as ECC memory shouldn’t write any errors to the disk. Many feel that this is a requirement for ZFS, and thus feel like ECC memory is a requirement for TrueNAS. I’m pointing this out because hardware options are minimal for ECC memory – at least when compared to non-ECC memory.

- The counterpoint to this argument is that ECC memory helps all filesystems. The question you’ll need to answer is if you want to run ECC memory with TrueNAS because if you do, you’ll need to ensure that your hardware supports it.

- On a personal level, I don’t run TrueNAS without ECC memory, but that’s not to say that you must. This is a huge difference between OpenMediaVault and TrueNAS and you must consider it when comparing these NAS operating systems.

- In short: you should run TrueNAS with ECC memory where possible.

- How Much Memory Does ZFS Need and Does It Have To Be ECC? - YouTube | Lawrence Systems

- You do not need a lot of memory for ZFS, but if you do use lots of memory you're going to get better performance out of ZFS (i.e. caching).

- Using ECC memory is better but it is not a requirement. Tom uses ECC on his TrueNAS servers, as shown in the video.

- ECC vs non-ECC RAM and ZFS | TrueNAS Community

- I've seen many people unfortunately lose their zpools over this topic, so I'm going to try to provide as much detail as possible. If you don't want to read to the end then just go with ECC RAM.

- For those of you that want to understand just how destructive non-ECC RAM can be, then I'd encourage you to keep reading. Remember, ZFS itself functions entirely inside of system RAM. Normally your hardware RAID controller would do the same function as the ZFS code. And every hardware RAID controller you've ever used that has a cache has ECC cache. The simple reason: they know how important it is to not have a few bits that get stuck from trashing your entire array. The hardware RAID controller (just like ZFS) absolutely NEEDS to trust that the data in RAM is correct.

- For those that don't want to read, just understand that ECC is one of the legs on your kitchen table, and you've removed that leg because you wanted to reuse old hardware that uses non-ECC RAM. Just buy ECC RAM and trust ZFS. Bad RAM is like your computer having dementia. And just like those old folks homes, you can't go ask them what they forgot. They don't remember, and neither will your computer.

- A full write-up and discussion.

- Q re: ECC Ram | TrueNAS Community

- Q: Is it still recommended to use ECC Ram on a TrueNAS Scale build?

- A1:

- Yes. It still uses ZFS file system which benefits from it.

- A2:

- It's recommended to use ECC any time you care about your data--TrueNAS or not, CORE or SCALE, ZFS or not. Nothing's changed in this regard, nor is it likely to.

- A3:

- One thing people overlook is that statistically non-ECC memory WILL have failures. Okay, perhaps at extremely rare times. However, now that ZFS is protecting billions of petabytes (okay, I don't know how much total... just guessing), there are bound to be failures from non-ECC memory that cause data loss. Or pool loss.

- Specifically, in-memory corruption of an already checksummed block that ends up being written to disk may be found by ZFS during the next scrub. BUT, in all likelihood that data is lost permanently unless you have unrelated backups. (Backups of corrupt data simply restore corrupt data...)

- Then there is the case of a not-yet-checksummed block that got corrupted. Along comes ZFS to give it a valid checksum and write it to disk. Except ZFS will never detect this as bad during a scrub unless it was metadata that is invalid (like a compression algorithm value not yet assigned), and then it is still data loss. Potentially the entire pool is lost.

- This is just for ZFS data, which is most of the movement. However, there are program code and data blocks that could also be corrupted...

- Are these rare? Of course!!! But, do you want to be a statistic?

- Can I install an ECC DIMM on a Non-ECC motherboard? | Integral Memory

- Most motherboards that do not have an ECC function within the BIOS are still able to use a module with ECC, but the ECC functionality will not work.

- Keep in mind, there are some cases where the motherboard will not accept an ECC module, depending on the BIOS version.

- Trying to understand the real impact of not having ECC : truenas | Reddit

- A1:

- From everything I've read, there's no inherent reason ZFS needs ECC more than any other system, it's just that people tend to come to ZFS for the fault tolerance and correction and ECC is part of the chain that keeps things from getting corrupted. It's like saying you have the most highly rated safety certification for your car and not wearing your seatbelt - you should have a seatbelt in any car.

- A2:

- The TrueNAS forums have a good discussion thread on it, that I think you might have read, Non-ECC and ZFS Scrub? | TrueNAS Community. If not, I strongly encourage it.

- The idea is, ECC prevents ZFS from incurring bitflip during day-to-day operations. Without ECC, there's always a non-zero chance it can happen. Since ZFS relies on the validity of the checksum when a file is written, memory errors could result in a bad checksum written to disk or an incorrect comparison on a following read. Again, just a non-zero chance of one or both events occurring, not a guarantee. ZFS lacks an "fsck" or "chkdsk" function to repair files, so once a file is corrupted, ZFS uses the checksum to note the file differs from the checksum and recover it, if possible. So, in the case of a corrupted checksum and a corrupted file, ZFS could potentially modify the file even further towards complete unusability. Others can comment if there's any way to detect this, other than via a pool scrub, but I'm unaware.

- Some people say, "turn off ZFS pool scrubs, if you have no ECC RAM", but ZFS will still checksum files and compare during normal read activity. If you have ECC memory in your NAS, it effectively eliminates the chance of memory errors resulting in a bad checksum on disk or a bad comparison during read operations. That's the only way. You probably won't find many people that say, "I lost data due to the lack of ECC RAM in my TrueNAS", but anecdotal evidence from the forum posts around ZFS pool loss points in that direction.

- A4:

- Because ZFS uses checksums a bitflip during read will result in ZFS incorrectly detecting the data as damaged and attempting to repair it. This repair will succeed unless the parity/redundancy it uses to repair it experiences the same bitflip, in which case ZFS will log an unrecoverable error. In neither case will ZFS replace the data on disk unless the bitflips coincidentally create a valid hash. The odds of this are about 1 in 1-with-80-zeroes-after-it.

- And lots more...
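The repair path described in A4 can be sketched for a two-way mirror. This is a simplified model, not ZFS code: SHA-256 from Python's `hashlib` stands in for the block checksum, a flipped bit in one copy stands in for damage, and `mirrored_read` is a hypothetical name. It returns the first copy that matches the stored checksum, rewrites any copy that does not, and raises an error when no copy matches, which corresponds to ZFS logging an unrecoverable error.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Stand-in for the ZFS block checksum."""
    return hashlib.sha256(data).hexdigest()


def mirrored_read(copies: list[bytearray], expected: str) -> bytes:
    """Read a block from a mirror, self-healing any copy that fails its checksum."""
    good = next((bytes(c) for c in copies if checksum(bytes(c)) == expected), None)
    if good is None:
        # Every copy is damaged: this is the unrecoverable-error case.
        raise OSError("unrecoverable: no copy matches the stored checksum")
    for copy in copies:
        if checksum(bytes(copy)) != expected:
            copy[:] = good  # overwrite the damaged copy with the verified data
    return good


# Usage: flip one bit on one side of the mirror, then read the block.
data = b"hello zfs"
expected = checksum(data)
disk_a, disk_b = bytearray(data), bytearray(data)
disk_a[0] ^= 0x01
print(mirrored_read([disk_a, disk_b], expected))  # b'hello zfs'
print(bytes(disk_a) == data)                      # True: the bad copy was rewritten
```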

- ECC Ram with Lz4 compression. | TrueNAS Community

- Q: I'm using IronWolf 2TB x2 drives with mirror configuration to have constant backup data. To be safe from data corruption on one of those two drives, Do I have to use ECC memory? As my server I'm using HP Prodesk 600 G1 and I don't think this PC is capable of reading ECC memory.

- A: Ericloewe

- LZ4 compression is not relevant to your question and does not affect the answer.

- The answer is that if you value your data, you should take all reasonable precautions to safeguard it, and that includes ECC RAM.

- A: winnielinnie

- ECC RAM assures the data you intend to be written (as a record) is correct before being written to the storage media.

- After this point, due to checksums and redundancy, ZFS will assure the data remains correct.

- With non-ECC RAM, if the data were to be corrupted before being written to storage, ZFS will simply keep this ("incorrectly") written record integral.

- According to ZFS, everything checks out.

- ECC RAM

- Create text file with the content: "apple"

- Before writing it to storage, the file's content is actually: "apply"

- The corruption is detected before writing it as a ZFS record to storage.

- Non-ECC RAM

- Create text file with the content: "apple"

- Before writing it to storage, the file's content is actually: "apply"

- This is not caught, and you in fact write a ZFS record to storage.

- ZFS creates a checksum and uses redundancy for the file that contains: "apply"

- Running scrubs and reading the file will not report any corruption. Because the checksum matches the record.

- Your file will always "correctly" have the content: "apply"

- A: Arwen

- While memory bit flips are rarer than disk problems, without ECC memory you don't know if you have a problem during operation. (Off line / boot time memory checks can be done if you suspect a problem...)

- And to add another complication to @winnielinnie's Non-ECC RAM first post, there is a window of time with ZFS where data could be checksummed while in memory, and then the data damaged by bad memory. Thus, bad data is written to disk, causing permanent data loss, but it is detectable.

- It is about risk avoidance. How much you want to avoid, and can afford to implement.
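winnielinnie's "apple"/"apply" example and Arwen's extra case can be reproduced with a short Python sketch. It is an illustration, not ZFS code: SHA-256 from `hashlib` stands in for whatever checksum your pool uses, `corrupt` is a hypothetical helper that XORs one byte to simulate a RAM error (0x1C is the mask that turns 'e' into 'y'), and `scrub_ok` models a scrub as nothing more than "does the record still match its stored checksum?".

```python
import hashlib


def checksum(data: bytes) -> str:
    """Stand-in for the ZFS block checksum (SHA-256 here)."""
    return hashlib.sha256(data).hexdigest()


def corrupt(data: bytes, index: int, mask: int) -> bytes:
    """Simulate a RAM error by XOR-ing one byte with a bit mask."""
    buf = bytearray(data)
    buf[index] ^= mask
    return bytes(buf)


def scrub_ok(record: bytes, stored_sum: str) -> bool:
    """A scrub only checks that the record still matches its checksum."""
    return checksum(record) == stored_sum


intended = b"apple"

# Case 1 (winnielinnie): RAM corrupts the data BEFORE it is checksummed.
bad = corrupt(intended, 4, 0x1C)           # 'e' (0x65) ^ 0x1C -> 'y' (0x79)
record1, sum1 = bad, checksum(bad)         # checksum is computed over the bad data
print(record1, scrub_ok(record1, sum1))    # b'apply' True  -> silent, undetectable

# Case 2 (Arwen): RAM corrupts the data AFTER it was checksummed.
sum2 = checksum(intended)                  # checksum of the correct data
record2 = corrupt(intended, 4, 0x1C)       # data changes before it reaches disk
print(record2, scrub_ok(record2, sum2))    # b'apply' False -> detected, but still lost
```

The first case passes the scrub because the checksum was calculated over data that was already wrong; the second fails the scrub, but the correct "apple" is gone either way. ECC RAM is there to catch the error before either of these can happen.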

Drive Bays

- TrueNAS Enclosure (Enterprise only)

- System Settings --> Enclosure

- This gives you visual disk management, including bay numbers. This is a very useful feature.

- View Enclosure Screen (Enterprise Only) | Documentation Hub - Provides information on the TrueNAS View Enclosure screen available only on compatible SCALE Enterprise systems.

- Some I looked at

Storage Controllers

- Don't use a RAID card for TrueNAS; use an HBA if you need extra drives.

- How to identify HDD location | TrueNAS Community

- You're using the wrong type of storage attachment. That's a RAID card, which means TrueNAS has no direct access to the disks and can't even see the serial numbers.

- You need an HBA card instead if you want to protect your data. Back it all up now and get that sorted before doing anything else.

- What's all the noise about HBA's, and why can't I use a RAID controller? | TrueNAS Community

- An HBA is a Host Bus Adapter.

- This is a controller that allows SAS and SATA devices to be attached to, and communicate directly with, a server.

- RAID controllers typically aggregate several disks into a Virtual Disk abstraction of some sort, and even in "JBOD" or "HBA mode" generally hide the physical device.

- What's all the noise about HBAs, and why can't I use a RAID controller? | TrueNAS Community - This seems to be a direct copy of the article above.

Drives

This is my TLDR:

- General

- You cannot change the Physical Sector size of any drive.

- Solid state drives do not have physical sectors in the same sense as spinning disks, as they do not have platters; LBA mapping is handled internally by the drive. This means that changing a solid state drive from 512e to 4Kn will potentially give only a minimal performance increase with ZFS (ashift=12), but might be useful for NTFS, whose default cluster size is 4096B.

- HDD (SATA Spinning Disks)

- They come in a variety of Sector size configurations

- 512n (512B Logical / 512B Physical)

- 512e (512B Logical / 4096B Physical)

- The 512e drive benefits from 4096B physical sectors whilst being able to emulate a 512B logical sector for legacy operating systems.

- 4Kn (4096B Logical / 4096B Physical)

- The 4Kn drives are faster than 512n drives because their larger sector size requires less error-correction data to be stored and read (the same 4096B of data needs 8 error-correction blocks on a 512n drive, but only 1 on a 4Kn drive).

- Custom Logical

- There are very few of these disks that allow you to set custom logical sector sizes, but quite a few allow you to switch between 512e and 4Kn modes (usually NAS and professional drives).

- Hot-swappable drives

- SSD (SATA)

- They are Solid State

- Most if not all SSDs are 512n

- A lot quicker than spinning disks

- Hot-swappable drives

- SAS

- They come in Spinning Disk and Solid State.

- Because of the environment these drives are going into, most of them have configurable logical sector sizes.

- Used mainly in Data Farms.

- The connector will allow SATA drives to be connected.

- I think SAS drives have multi-I/O, unlike SATA but similar to NVMe.

- Hot-swappable drives

- NVMe

- A lot of these drives come as 512n. I have seen a few that allow you to switch from 512e to 4Kn and back, and this does vary from manufacturer to manufacturer. Switching modes will not make a huge difference in performance.

- These drives need a direct connection to the PCIe bus, usually via 4 PCIe lanes (x4).

- They can get quite hot.

- They can do multiple reads and writes at the same time, because NVMe supports many parallel command queues and the drives are connected via multiple PCIe lanes.

- A lot quicker than SATA SSDs.

- Cannot hot-swap drives.

- U.2

- This is more a connection standard rather than a new type of drive.

- I would avoid this technology not because it is bad, but because U.3 is a lot better.

- Hot-swappable drives (SATA/SAS only)

- The end points (i.e. drive bays) need to be preset to either SATA/SAS or NVMe.

- U.3 (Buy this kit when it is cheap enough)

- This is more a connection standard rather than a new type of drive.

- This is a revision of the U.2 standard and is where all drives will be moving to in the near future.

- Hot-swappable drives (SATA/SAS/NVMe)

- The same connector can accept SATA/SAS/NVMe without having to preset the drive type. This allows easy mix and matching using the same drive bays.

- Can support SAS/SATA/NVMe drives all on the same form factor and socket, which means one drive bay and socket type for them all. Adapters are easy to get.

- Will require a Tri-mode controller card.

- General

- You should use 4Kn drives with ZFS, as 4096B blocks are the smallest size TrueNAS will write by default (ashift=12, i.e. 2^12 = 4096 bytes).

- If your drive supports 4Kn, you should set it to this mode. It is better for performance, and if it was not, they would not have made it.

- 512e drives are OK and should be fine for most people's home network.

- In Linux, `Sata 0` is usually referred to as `sda`, although the kernel assigns these names in the order it detects the disks, so this is not guaranteed.
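The sector-size arithmetic above can be checked with a small Python sketch. It is illustrative only and the function names are hypothetical: `ashift_for` converts a block size to the ZFS ashift value (ashift is the base-2 logarithm of the smallest block ZFS writes), `logical_per_physical` shows why a 512e drive packs eight 512B logical sectors into each 4096B physical sector, and `needs_read_modify_write` flags writes that do not line up with 4096B physical sectors, which a 512e drive has to handle by reading, patching and rewriting the whole physical sector.

```python
def ashift_for(block_bytes: int) -> int:
    """Return the ZFS ashift for a block size (ashift = log2(block size))."""
    if block_bytes <= 0 or block_bytes & (block_bytes - 1):
        raise ValueError("block size must be a power of two")
    return block_bytes.bit_length() - 1


def logical_per_physical(logical: int, physical: int) -> int:
    """How many logical sectors fit in one physical sector (512e: 4096 // 512)."""
    return physical // logical


def needs_read_modify_write(offset: int, length: int, physical: int) -> bool:
    """True if a write does not start and end on a physical-sector boundary."""
    return offset % physical != 0 or length % physical != 0


print(ashift_for(4096))                         # 12, the TrueNAS default
print(ashift_for(512))                          # 9
print(logical_per_physical(512, 4096))          # 8
print(needs_read_modify_write(512, 512, 4096))  # True: a lone 512B write on a 512e drive
print(needs_read_modify_write(0, 8192, 4096))   # False: 4096B-aligned, as with ashift=12
```

This is why ashift=12 suits both 512e and 4Kn drives: every allocation ZFS makes is a multiple of 4096B, so the drive does not have to split a physical sector.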

- Error on a disk | TrueNAS Community

- There's no need for drives to be identical, or even similar, although any vdev will obviously be limited by its least performing member.

- Note, though that WD drives are merely marketed as "5400 rpm-class", whatever that means, and actually spin at 7200 rpm.

- U.2 and NVMe - To speed up the PC performance | Delock - Some nice diagrams and explanations.

- SAS vs SATA - Difference and Comparison | Diffen - SATA and SAS connectors are used to hook up computer components, such as hard drives or media drives, to motherboards. SAS-based hard drives are faster and more reliable than SATA-based hard drives, but SATA drives have a much larger storage capacity. Speedy, reliable SAS drives are typically used for servers while SATA drives are cheaper and used for personal computing.

- U.2, U.3, and other server NVMe drive connector types (in mid 2022) | Chris's Wiki - A general discussion about these different formats and their availability.

- What drives should I use?

- Don't use pen drives, thumb drives, USB sticks or USB hard drives for storage or for your boot drive.

- Use CMR HDD drives, SSD, NVMe for storage and boot.

- Update: WD Red SMR Drive Compatibility with ZFS | TrueNAS Community

- Thanks to the FreeNAS community, we uncovered and reported on a ZFS compatibility issue with some capacities (6TB and under) of WD Red drives that use SMR (Shingled Magnetic Recording) technology. Most HDDs use CMR (Conventional Magnetic Recording) technology which works well with ZFS. Below is an update on the findings and some technical advice.

- WD Red Pro drives are CMR based and designed for higher intensity workloads. These work well with ZFS, FreeNAS, and TrueNAS.

- WD Red Plus is now used to identify WD drives based on CMR technology. These work well with ZFS, FreeNAS, and TrueNAS.

- WD Red is now being used to identify WD drives using SMR, or more specifically, DM-SMR (Device-Managed Shingled Magnetic Recording). These do not work well with ZFS and should be avoided to minimize risk.

- There is an excellent SMR Community forum post (thanks to Yorick) that identifies SMR drives from Western Digital and other vendors. The latest TrueCommand release also identifies and alerts on all WD Red DM-SMR drives.

- The new TrueNAS Minis only use WD Red Plus (CMR) HDDs ranging from 2-14TB. Western Digital’s WD Red Plus hard drives are used due to their low power/acoustic footprint and cost-effectiveness. They are also a popular choice among FreeNAS community members building systems of up to 8 drives.

- WD Red Plus is one of the most popular drives the FreeNAS community uses.
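The naming rule from that update can be written down as a tiny lookup. This is a sketch under a big assumption: it only looks at the marketing name (WD Red Pro and WD Red Plus are CMR, plain WD Red is DM-SMR) and ignores model numbers and older drives sold before the renaming, so always check the exact model against the SMR lists linked below. `wd_red_recording` and `suitable_for_zfs` are hypothetical helper names.

```python
def wd_red_recording(product: str) -> str:
    """Classify a WD Red family product name as "CMR" or "DM-SMR".

    Based only on the naming described above; model numbers are ignored.
    """
    # Normalise case, spacing and the trademark symbol, e.g. "WD Red™ Plus 4TB".
    name = " ".join(product.lower().replace("™", "").split())
    if name.startswith(("wd red pro", "wd red plus")):
        return "CMR"
    if name.startswith("wd red"):
        return "DM-SMR"
    raise ValueError(f"not a WD Red family name: {product!r}")


def suitable_for_zfs(product: str) -> bool:
    """CMR drives work well with ZFS; DM-SMR drives should be avoided."""
    return wd_red_recording(product) == "CMR"


for drive in ("WD Red Plus 4TB", "WD Red Pro 8TB", "WD Red 4TB"):
    print(drive, wd_red_recording(drive), suitable_for_zfs(drive))
```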

- CMR vs SMR

- List of known SMR drives | TrueNAS Community - This explains some of the differences of `SMR vs CMR` along with a list of some drives

- Device-Managed Shingled Magnetic Recording (DMSMR) - Western Digital - Find out everything you want to know about how Device-Managed SMR (DMSMR) works.

- List of known SMR drives | TrueNAS Community

- Hard drives that write data in overlapping, "shingled" tracks, have greater areal density than ones that do not. For cost and capacity reasons, manufacturers are increasingly moving to SMR, Shingled Magnetic Recording. SMR is a form of PMR (Perpendicular Magnetic Recording). The tracks are perpendicular, they are also shingled - layered - on top of each other. This table will use CMR (Conventional Magnetic Recording) to mean "PMR without the use of shingling".

- SMR allows vendors to offer higher capacity without the need to fundamentally change the underlying recording technology.

- New technology such as HAMR (Heat Assisted Magnetic Recording) can be used with or without shingling. The first drives are expected in 2020, in either flavor.

- SMR is well suited for high-capacity, low-cost use where writes are few and reads are many.

- SMR has worse sustained write performance than CMR, which can cause severe issues during resilver or other write-intensive operations, up to and including failure of that resilver. It is often desirable to choose a CMR drive instead. This thread attempts to pull together known SMR drives, and the sources for that information.

- There are three types of SMR:

- Drive Managed, DM-SMR, which is opaque to the OS. This means ZFS cannot "target" writes, and is the worst type for ZFS use. As a rule of thumb, avoid DM-SMR drives, unless you have a specific use case where the increased resilver time (a week or longer) is acceptable, and you know the drive will function for ZFS during resilver. See (h)

- Host Aware, HA-SMR, which is designed to give ZFS insight into the SMR process. Note that ZFS code to use HA-SMR does not appear to exist. Without that code, a HA-SMR drive behaves like a DM-SMR drive where ZFS is concerned.

- Host Managed, HM-SMR, which is not backwards compatible and requires ZFS to manage the SMR process.

- I am assuming ZFS does not currently handle HA-SMR or HM-SMR drives, as this would require Block Pointer Rewrite. See page 24 of (d) as well as (i) and (j).

- Western Digital implies WD Red NAS SMR drive users are responsible for overuse problems – Blocks and Files

- Has some excellent diagrams showing what is happening on the platters.

- Western Digital

- Western Digital Red, Red Plus, and Red Pro: Which NAS HDD is best? - NAS Master

- Western Digital has three families of NAS drives but which is best for your enclosure? I'm going to run you through WD Red, WD Red Plus, and WD Red Pro.

- Deals with CMR vs SMR

- Western Digital is trying to redefine the word “RPM” | Ars Technica

- The new complaint is that Western Digital calls 7200RPM drives "5400 RPM Class"—and the drives' own firmware report 5400 RPM via the SMART interface.

- 120 cycles/sec multiplied by 60 secs/min comes to 7,200 cycles/min. So in other words, these "5400 RPM class" drives really were spinning at 7,200rpm.

- WD Red Internal HDD SMR & CMR Network Attached Storage (NAS) Drive Information | Western Digital

- On WD Red NAS Drives - Western Digital Corporate Blog

- Colours explained

- Western Digital Drives: Colour Coding Explained - Dignited - Hard drive makers are continuously innovating and enhancing storage solutions. Here's everything you need to know about Western Digital drives color codes.

- What do different WD Hard Drive colors mean? - Western Digital Hard Disk Drives (WD HDD) come in blue, red, black, green, purple, gold colors. Colors explained; Comparison & Differences covered.

- Western Digital HDD Colors Explained « HDDMag - Western Digital’s HDD series has six colors, which is confusing. We'll explain the difference between all the Western Digital HDD colors.

- List of Western Digital CMR and SMR hard drives (HDD) – NAS Compares

- List of WD CMR and SMR hard drives (HDD). If you know an SMR type of drive, share it with others in a table below!

- PMR, also known as conventional magnetic recording (CMR), works by aligning the poles of the magnetic elements, which represent bits of data, perpendicularly to the surface of the disk. Magnetic tracks are written side-by-side, without overlapping. SMR offers larger drive capacity than the traditional PMR because SMR technology achieves greater areal density.

- NVMe (SGFF)/U.2/U.3 - The way forward

- General

- NVM Express - Wikipedia

- U.2, formerly known as SFF-8639, is a computer interface for connecting solid-state drives to a computer. It uses up to four PCI Express lanes. Available servers can combine up to 48 U.2 NVMe solid-state drives.[35]

- U.3 is built on the U.2 spec and uses the same SFF-8639 connector. It is a 'tri-mode' standard, combining SAS, SATA and NVMe support into a single controller. U.3 can also support hot-swap between the different drives where firmware support is available. U.3 drives are still backward compatible with U.2, but U.2 drives are not compatible with U.3 hosts.

- These are TOTALLY Different - Let me Explain. (U.3 Storage Comparison) - YouTube | Linus Tech Tips

- U.3 is an interface that combines the power of NVMe, SAS, and SATA drives into one controller, but how does that work?

- Difference between U.2 and U.3

- NVM Express - Wikipedia

- Adapters / Kit

- Adapter, M.2 to U.2 - M.2 PCIe NVMe SSDs - Drive Adapters and Drive Converters (U2M2E125) | StarTech.com

- M.2 to U.3 Adapter For M.2 NVMe SSDs - Drive Adapters and Drive Converters (1M25-U3-M2-ADAPTER) | Hard Drive Accessories | StarTech.com

- Adapter, U.2 to M.2 - 2.5” U.2 NVMe SSD - Drive Adapters and Drive Converters (M2E4SFF8643) | StarTech.com

- 4 solutions tested: Add 2.5" SFF NVMe (U.2) to your current system - We test four of the newest solutions to add 2.5" SFF NVMe SSDs to your current system and had many lessons learned along the way.

- Advice about NVMe U2 card / backplane | ServeTheHome Forums - Hello, I am a little bit a newbie about SAS U2 card but I am looking for a RAID controller or HBA able to support multiple U2 SSD, at least 4, but 8 will be ideal. Do you have any advice on such device?

- ICY BOX Mobile Rack for 2.5" U.2/SATA/SAS HDD/SSD LN110254 - IB-2212U2 | SCAN UK - With this mobile rack, U.2 SSDs can now be installed in addition to SATA and SAS HDDs. The great advantage of U.2 is its high compatibility with other interfaces.

- Icy Dock Rugged Full Metal 4 Bay 2.5" NVMe U.2 SSD Mobile Rack For External 5.25" Bay LN90447 - MB699VP-B | SCAN UK - ICYDOCK’s latest product for NVMe U.2 SSD brings the next level of ultra high speed storage in a compact package with the ToughArmor MB699VP-B. The ToughArmor MB699VP-B is a ruggedised full metal SSD cage with hot-swappable drive caddies, supporting up to 4x NVMe U.2 SSD in a single 5.25” device bay. To fully use the speeds of NVMe SSDs, each drive bay uses its own miniSAS HD (SFF-8643) connector, maximising NVMe U.2 SSD's potential transfer bandwidth rate of 32Gb/s.

- MB699VP-B V3_4 Bay 2.5" U.2/U.3 NVMe SSD PCIe 4.0 Mobile Rack Enclosure for External 5.25" Drive Bay (4 x OCuLink SFF-8612 4i) | ICY DOCK - The ToughArmor MB699VP-B V3 is a Ruggedized Quad Bay Removable U.2/U.3 NVMe SSD Enclosure supporting PCIe 4.0 and fetching up to 64Gb/s data transfer rates through OCuLink (SFF-8612) interface.

- ToughArmor Series_REMOVABLE 2.5" SSD / HDD ENCLOSURES_| ICY DOCK - ICY DOCK product page overview description for SATA/SAS/NVMe rugged mobile rack enclosures.
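The 32Gb/s and 64Gb/s figures quoted for these enclosures appear to be the raw bandwidth of a four-lane (x4) PCIe link: PCIe 3.0 signals at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, both using 128b/130b encoding. A quick Python sketch of that arithmetic follows; `link_gbps` is a hypothetical helper, and real drives see a little less again because of protocol overhead.

```python
# Raw signalling rate per lane, in GT/s, for each PCIe generation.
GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}
# PCIe 3.0 to 5.0 use 128b/130b encoding: 128 data bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def link_gbps(gen: int, lanes: int, effective: bool = False) -> float:
    """Bandwidth of a PCIe link in Gb/s, raw or after line encoding."""
    raw = GT_PER_LANE[gen] * lanes
    return raw * ENCODING_EFFICIENCY if effective else raw


for gen in (3, 4):
    raw = link_gbps(gen, 4)
    usable_gb_per_s = link_gbps(gen, 4, effective=True) / 8  # bits -> bytes
    print(f"PCIe {gen}.0 x4: {raw:.0f} Gb/s raw, about {usable_gb_per_s:.2f} GB/s after encoding")
```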

- U.2 (SFF-8639)

- U.2 - Wikipedia

- It was developed for the enterprise market and designed to be used with new PCI Express drives along with SAS and SATA drives. It uses up to four PCI Express lanes and two SATA lanes.

- The Holy Grail, Finally Found: U.2 to PCIe4 Adapters that Work! - YouTube | Level1Techs

- Types of SSD form factors - Kingston Technology - When selecting an SSD, you must know which form factor you need. This is based on your existing hardware. Your laptop or desktop PC will have slots and connections for M.2, mSATA or SATA, and possibly more than one of these. How do you choose?

- M.2 vs U.2: a Detailed Comparison - The Infobits - Traditional hard disc drives (HDDs) have long been considered a computer system's weak point in terms of speed performance.

- U.3 (SFF-8639 or SFF-TA-1001)

- Can be used to make Universal Drive bays.

- Micron 7400 SSD’s – Featuring U.3 the next generation NVMe interface

- U.3 is a new interface standard for 2.5” NVMe SSDs that is an evolution of U.2 and has been used for some time. The main benefit is that the disk backplane inside the server chassis that features U.3 interfaces can carry SATA, SAS or NVMe signal through one physical connector and one cable that is connected to a Tri-Mode controller. This results in fewer connectors on the backplane and fewer cables inside the server, which in theory means lower server cost.

- Diagrams and further explanations of the standard.

- U.2 – Still the Industry Standard in 2.5” NVMe SSDs | Dell Technologies Info Hub

- This DfD is an informative technical paper meant to educate readers about the initial intentions around the U.3 interface standard, how it proceeded to fall short upon development, and why server users may want to continue using U.2 SSDs for their server storage needs.

- U.3 has been touted as a way to enable a tri-mode backplane that will support SAS, SATA and NVMe drives to work across multiple use-cases.

- What you need to know about U.3 - Quarch Technology

- What does U.3 mean for the ever-developing data storage industry? Here's a hardware engineer's perspective on this drive host controller.

- U.3 is a ‘Tri-mode’ standard, building on the U.2 spec and using the same SFF-8639 connector. It combines SAS, SATA and NVMe support into a single controller. Where firmware support is available, U.3 can also support hot-swap between the different drives.

- With U.2, you’d need a separate connector pinout/backplane, a separate mid-plane and controller for each protocol. U.3 only requires 1 backplane, 1 mid-plane and 1 controller, supporting all these drives in the same slot. This could be a great advantage, with SAS and NVMe forecasted to increase over the coming years—and SATA to decrease (according to OpenCompute).

- Shows the pinouts of U.2 and U.3

- Evolving Storage with SFF-TA-1001 (U.3) Universal Drive Bays - StorageReview.com

- U.3 is a term that refers to compliance with the SFF-TA-1001 specification, which also requires compliance with the SFF-8639 Module specification.

- U.3 can support mixed NVMe and SAS/SATA in drive bays

- U.2 drive bays have to be preset to either NVMe or SAS/SATA

- U.3 bays require a tri-mode controller card.

- The tri-mode controller establishes connectivity between the host server and the drive backplane, supporting SAS, SATA and NVMe storage protocols.

- General

Managing Hardware

This section covers the times you need to interact with the hardware, such as identifying and swapping a failing disk.

UPS

- My APC SMT1500IC UPS Notes | QuantumWarp - These are my notes on using and configuring my APC SMT1500IC UPS.

Hard Disks

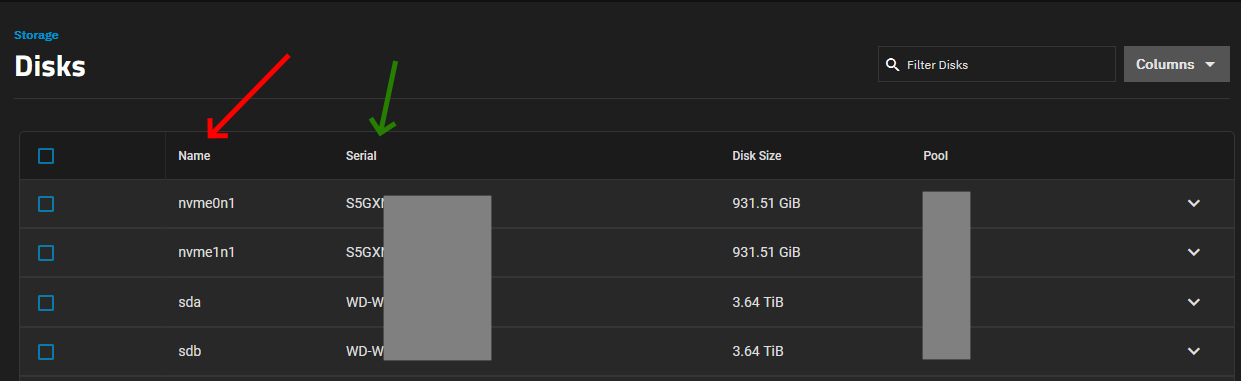

- Get boot drive serials

- Storage --> Disks

- Changing Drives

- Replacing Disks | TrueNAS Documentation Hub - Provides disk replacement instructions that includes taking a failed disk offline and replacing a disk in an existing VDEV. It automatically triggers a pool resilver during the replacement process.

- How To Replace A Failed Drive in TrueNAS Core or Scale - YouTube | Lawrence Systems

- TrueNAS 12: Replacing Failed Drives - YouTube | Lawrence Systems

- Worth noting: if you progressively replace every disk in a VDEV with larger disks, you can expand the pool into the extra space once the last disk has been replaced and the pool has finished resilvering onto the larger disks.
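The reason the extra space only appears at the very end is that a VDEV treats every member as if it were the size of its smallest disk. Below is a minimal sketch of that arithmetic. It assumes a single mirror or RAIDZ VDEV and approximates usable space as (disks - parity) x smallest disk, ignoring ZFS metadata, RAIDZ padding and slop space. The function name and sizes are illustrative only.

```python
"""Rough usable-capacity estimate for one ZFS VDEV (illustrative only).

Assumption: usable space ~= (disks - parity) x smallest disk, ignoring
metadata, RAIDZ padding and slop space.
"""

PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def approx_vdev_capacity_tb(disk_sizes_tb: list[float], layout: str) -> float:
    """Return the approximate usable capacity of a VDEV in TB."""
    smallest = min(disk_sizes_tb)  # every member is treated as this size
    if layout == "mirror":
        return smallest  # a mirror holds one disk's worth of data
    parity = PARITY[layout]
    return (len(disk_sizes_tb) - parity) * smallest


if __name__ == "__main__":
    # Replace 4TB disks one at a time with 8TB disks in a 4-wide RAIDZ1.
    sizes = [4.0, 4.0, 4.0, 4.0]
    print("start:", approx_vdev_capacity_tb(sizes, "raidz1"), "TB")
    for i in range(len(sizes)):
        sizes[i] = 8.0  # this disk has been swapped and resilvered
        print(f"after replacing disk {i + 1}:",
              approx_vdev_capacity_tb(sizes, "raidz1"), "TB")
```

The estimate stays flat through the first three swaps and only jumps on the fourth, which is why there is nothing to expand into until every disk has been replaced.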

- Maintenance

- Intermittent SMART errors? - #9 by joeschmuck - TrueNAS General - TrueNAS Community Forums

- If you cannot pass a SMART long test, it is time to replace the drive, and a short test is barely a small portion of the long test. Don’t wait on any other values, they do not matter. A failure of a Short or Long test is solid proof the drive is failing.

- I always recommend a daily SMART short test and a weekly SMART long test, with some exceptions such as if you have a high drive count (50 or 200 for example) then you may want to perform a monthly long test and spread the drives out across that month. The point is to run a long test periodically. You may have significantly more errors than you know.

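For a high drive count, the long tests can be spread across the month as suggested above. Below is a minimal sketch of one way to do that. It assumes a 28-day cycle, so every day number exists in every month, and uses hypothetical drive names. The drives are dealt out round-robin, so each day tests roughly the same number of drives. In TrueNAS you would then create a separate scheduled S.M.A.R.T. LONG test for each day's group.

```python
"""Spread monthly SMART long tests evenly over the days of a month."""


def spread_long_tests(drives: list[str], days: int = 28) -> dict[int, list[str]]:
    """Map day-of-month (1..days) to the drives whose long test runs that day.

    Drives are dealt out round-robin, so the busiest and quietest days
    differ by at most one drive.
    """
    schedule: dict[int, list[str]] = {day: [] for day in range(1, days + 1)}
    for index, drive in enumerate(drives):
        schedule[index % days + 1].append(drive)
    return schedule


if __name__ == "__main__":
    # 50 hypothetical drives, matching the forum's example of a high drive count.
    drives = [f"disk{n:02d}" for n in range(1, 51)]
    for day, group in spread_long_tests(drives).items():
        print(f"day {day:2d}: {', '.join(group)}")
```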

- Testing / S.M.A.R.T.

- Hard Drive Burn-in Testing | TrueNAS Community - For somebody (such as myself) looking for a single cohesive guide to burn-in testing, I figured it'd be nice to have all of the info in one place to just follow, with relevant commands. So, having worked my way through reading around and doing my own testing, here's a little more n00b-friendly guide, written by a n00b.

- Managing S.M.A.R.T. Tests | Documentation Hub - Provides instructions on running S.M.A.R.T. tests manually or automatically, using Shell to view the list of tests, and configuring the S.M.A.R.T. test service.

- Manual S.M.A.R.T. Test

- Storage --> Disks --> select a disk --> Manual Test: (LONG|SHORT|CONVEYANCE|OFFLINE)

- When you start a manual test, the response might take a moment.

- Not all drives support ‘Conveyance Self-test’.

- If your RAID card is not a modern one, it might not pass the test commands through to the drive correctly (you should not be using a RAID card with TrueNAS anyway).

- When you run a long test, make a note of the expected finish time as it could be a while before you see the `Manual Test Summary`:

Expected Finished Time:
sdb: 2022-11-07 19:32:45
sdc: 2022-11-07 19:47:45
sdd: 2022-11-07 19:37:45
sde: 2022-11-07 20:02:45

You can monitor the progress, and confirm the drive is working, by clicking the task manager icon (top right, it looks like a clipboard).
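If you start long tests on several drives at once, the latest time in that summary tells you when they will all be done. Below is a small sketch that pulls the drive/time pairs out of the summary text after you copy it from the GUI, then reports the last one to finish. The `drive: YYYY-MM-DD HH:MM:SS` format is assumed from the example above.

```python
"""Find when the last of several SMART long tests should finish."""

import re
from datetime import datetime

# Matches pairs such as "sdb: 2022-11-07 19:32:45" (format taken from the GUI summary).
PAIR = re.compile(r"(\w+): (\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})")


def finish_times(summary: str) -> dict[str, datetime]:
    """Return {drive: expected finish time} parsed from the summary text."""
    return {
        drive: datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S")
        for drive, stamp in PAIR.findall(summary)
    }


def last_to_finish(summary: str) -> tuple[str, datetime]:
    """Return the drive whose test finishes last, and when."""
    times = finish_times(summary)
    if not times:
        raise ValueError("no drive/time pairs found in the summary")
    drive = max(times, key=lambda name: times[name])
    return drive, times[drive]


if __name__ == "__main__":
    text = ("Expected Finished Time: sdb: 2022-11-07 19:32:45 "
            "sdc: 2022-11-07 19:47:45 sdd: 2022-11-07 19:37:45 "
            "sde: 2022-11-07 20:02:45")
    print(last_to_finish(text))
```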

- Test disk read/write speed

- These are just a collection of dd commands people have used.

- Test disk read/write speed | TrueNAS Community - Hi, is there any way to test the read/write speed of individual disks.

- Testing zpool IO performance | TrueNAS Community

- truenas - How to correctly benchmark sequential read speeds on 2.5" hard drive with fio on FreeBSD? - Server Fault

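Below is a rough Python equivalent of a `dd if=/dev/sdX of=/dev/null bs=1M count=N` read test, written as a minimal sketch. It reads a file or block device sequentially in 1 MiB blocks and reports MiB/s. Reading a raw device such as `/dev/sda` needs root. Reads from an ordinary file are usually served from RAM (ZFS ARC or the page cache), so treat file results as an upper bound rather than a disk benchmark.

```python
"""Sequential read throughput test, similar in spirit to dd of=/dev/null."""

import sys
import time

BLOCK_SIZE = 1024 * 1024  # 1 MiB, like dd bs=1M


def read_throughput(path: str, count: int) -> tuple[int, float]:
    """Read up to `count` blocks from `path`; return (bytes_read, MiB/s)."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as source:  # unbuffered, like dd
        for _ in range(count):
            chunk = source.read(BLOCK_SIZE)
            if not chunk:  # end of file/device reached early
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    mib_per_s = (total / BLOCK_SIZE) / elapsed if elapsed > 0 else float("inf")
    return total, mib_per_s


if __name__ == "__main__":
    # Usage (as root for a raw device): python3 readtest.py /dev/sda 5000
    if len(sys.argv) == 3:
        device, blocks = sys.argv[1], int(sys.argv[2])
        read_bytes, speed = read_throughput(device, blocks)
        print(f"read {read_bytes} bytes at {speed:.1f} MiB/s")
```

Running it against `/dev/zero` is a handy way to check the script itself before you point it at a real disk.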

- Quick question about HDD testing and SMART conveyance test | TrueNAS Community

- Q: I have a 3 TB SATA HDD that was considered "bad" but I have reasons to believe that it was the controller card of the computer it came from that was bad.

- If you look at the smartctl -a data for your disk, it tells you exactly how many minutes a test takes to complete. Typical durations are 6-9 hours for 3-4TB drives.

- Conveyance is wholly inadequate for your needs.

- I'd consider your disk good only if all smart data on the disk is good, badblocks for a few passes finds no problems, and a long test finishes without errors.
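As the answer above says, the drive reports how long its own tests take. For ATA drives, `smartctl -c /dev/sdX` (also included in `smartctl -a`) prints this over two lines, `Extended self-test routine` followed by `recommended polling time: ( N) minutes.`. Below is a minimal sketch that extracts that figure from captured output and adds it to a start time. The sample text is illustrative. SAS and NVMe drives report this differently, so the regex will not match their output.

```python
"""Estimate when a SMART extended (long) test will finish."""

import re
from datetime import datetime, timedelta
from typing import Optional

# smartctl -c / -a output for ATA drives spreads this over two lines.
EXTENDED = re.compile(
    r"Extended self-test routine\s+recommended polling time:\s*\(\s*(\d+)\)\s*minutes"
)


def long_test_minutes(smartctl_output: str) -> Optional[int]:
    """Return the extended self-test duration in minutes, if present."""
    match = EXTENDED.search(smartctl_output)
    return int(match.group(1)) if match else None


def expected_finish(smartctl_output: str, started: datetime) -> Optional[datetime]:
    """Return started + recommended polling time, or None if not reported."""
    minutes = long_test_minutes(smartctl_output)
    return started + timedelta(minutes=minutes) if minutes is not None else None


if __name__ == "__main__":
    sample = (
        "Short self-test routine \n"
        "recommended polling time: \t (   2) minutes.\n"
        "Extended self-test routine\n"
        "recommended polling time: \t ( 479) minutes.\n"
    )
    print(expected_finish(sample, datetime(2022, 11, 7, 12, 0)))
```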

- How to View SMART Results in TrueNAS in 2023 - WunderTech - This tutorial looks at how to view SMART results in TrueNAS. There are also instructions how to set up SMART Tests and Email alerts!

- SOLVED - How to Troubleshoot SMART Errors | TrueNAS Community

sudo smartctl -a /dev/sda - Prints all SMART information about the drive.

sudo smartctl -x /dev/sda - Prints all SMART and non-SMART information, even more than -a.

- How to identify if HDD is going to die or it's cable is faulty? | Tom's Hardware Forum

- I connected another SATA cable available in the PC case and ran Seatools for diagnostics, and now it shows that everything is OK! And everything works smoothly as well!

- What is Raw Read Error Rate of a Hard Drive and How to Use It - The Raw Read Error Rate is just one of many important S.M.A.R.T. data values that you should pay attention to. Learn more about it here.

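- TYPE (Pre-fail or Old_age) only classifies the attribute. A Pre-fail attribute at or below its threshold indicates pending failure, and an Old_age one indicates wear-out. The type on its own is not an indicator that anything is wrong.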

- smart - S.M.A.R.T attribute saying FAILING_NOW - Server Fault

- The answer is inside smartctl man page:

- If the Attribute's current Normalized value is less than or equal to the threshold value, then the "WHEN_FAILED" column will display "FAILING_NOW". If not, but the worst recorded value is less than or equal to the threshold value, then this column will display "In_the_past"

- In short, your VALUE column has not recovered to a value above the threshold. Maybe your disk really is failing now (and each reboot causes some CRC errors), or the disk firmware treats this kind of error as permanent and will not restore the instantaneous value to 0.


- smartctl(8) - Linux man page

- smartctl controls the Self-Monitoring, Analysis and Reporting Technology (SMART) system built into many ATA-3 and later ATA, IDE and SCSI-3 hard drives.

- The results of this automatic or immediate offline testing (data collection) are reflected in the values of the SMART Attributes. Thus, if problems or errors are detected, the values of these Attributes will go below their failure thresholds; some types of errors may also appear in the SMART error log. These are visible with the '-A' and '-l error' options respectively.
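The man page rule quoted above is simple enough to apply yourself to the attribute table that `smartctl -A` prints. Below is a minimal sketch that recomputes the WHEN_FAILED state from the VALUE, WORST and THRESH columns. It assumes the standard ten-column ATA table layout, ending in `WHEN_FAILED RAW_VALUE`. The sample rows are made up for illustration.

```python
"""Re-derive smartctl's WHEN_FAILED column from an ATA attribute table."""


def when_failed(value: int, worst: int, thresh: int) -> str:
    """Apply the rule from the smartctl man page."""
    if value <= thresh:
        return "FAILING_NOW"
    if worst <= thresh:
        return "In_the_past"
    return "-"


def check_attributes(table: str) -> list[tuple[str, str]]:
    """Return (attribute name, state) for every row of `smartctl -A` output."""
    results = []
    for line in table.splitlines():
        fields = line.split(maxsplit=9)  # RAW_VALUE may itself contain spaces
        if len(fields) < 10 or not fields[0].isdigit():
            continue  # skip the header, blank lines and other text
        name = fields[1]
        value, worst, thresh = (int(f) for f in fields[3:6])
        results.append((name, when_failed(value, worst, thresh)))
    return results


# Made-up sample rows in the smartctl -A layout.
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  1 Raw_Read_Error_Rate     0x002f   200   200   051    Pre-fail  Always       -       0
  3 Spin_Up_Time            0x0027   180   021   021    Pre-fail  Always   In_the_past 5000
  5 Reallocated_Sector_Ct   0x0033   140   140   140    Pre-fail  Always   FAILING_NOW 1032
"""

if __name__ == "__main__":
    for name, state in check_attributes(SAMPLE):
        print(f"{name:24} {state}")
```

The checks use the normalized VALUE/WORST/THRESH columns, not RAW_VALUE, which is why a large raw count does not by itself trip FAILING_NOW.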

- Identify Drives

- Power down the TrueNAS and physically read the serials on the drives before powering back up again.

- Drive identification in TrueNAS is done by drive serials.

- Linux drive and partition names

- The Linux device names (e.g. sda, sdb, sdX) are not bound to the SATA port or to the drive, so they can change. They are assigned in the order the drives are detected and nothing else, so they cannot be used for drive identification.

- C.4. Device Names in Linux - Linux disks and partition names may be different from other operating systems. You need to know the names that Linux uses when you create and mount partitions. Here's the basic naming scheme:

- Names for ATA and SATA disks in Linux - Unix & Linux Stack Exchange - Assume that we have two disks, one master SATA and one master ATA. How will they show up in /dev?

- How to match ata4.00 to the apropriate /dev/sdX or actual physical disk? - Ask Ubuntu

- Some of the code mentioned

dmesg | grep ata

grep -E "^[0-9]{1,}" /sys/class/scsi_host/host*/unique_id

ls -l /sys/block/sd*


- linux - Mapping ata device number to logical device name - Super User

- I'm getting kernel messages about 'ata3'. How do I figure out what device (/dev/sd_) that corresponds to?

ls -l /sys/block/sd*

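The `ls -l /sys/block/sd*` trick works because each entry is a symlink whose target path contains the `ataN` port the disk is attached to, which is the same name the kernel messages use. Below is a minimal sketch of the same lookup in Python. It assumes SATA/AHCI disks. Disks behind SAS HBAs or USB have different paths and simply map to None. The sample paths in the test are illustrative.

```python
"""Map /dev/sdX names to the kernel's ataN port via /sys/block symlinks."""

import os
import re
from typing import Optional

ATA_PORT = re.compile(r"/(ata\d+)/")


def ata_port(link_target: str) -> Optional[str]:
    """Extract 'ataN' from a /sys/block/sdX symlink target, if any."""
    match = ATA_PORT.search(link_target)
    return match.group(1) if match else None


def map_disks(sys_block: str = "/sys/block") -> dict[str, Optional[str]]:
    """Return {sdX: ataN or None} for every sd* symlink under /sys/block."""
    mapping: dict[str, Optional[str]] = {}
    if not os.path.isdir(sys_block):
        return mapping  # not Linux, or sysfs is not mounted
    for name in sorted(os.listdir(sys_block)):
        path = os.path.join(sys_block, name)
        if name.startswith("sd") and os.path.islink(path):
            mapping[name] = ata_port(os.readlink(path))
    return mapping


if __name__ == "__main__":
    for disk, port in map_disks().items():
        print(f"{disk}: {port or 'not on an ATA port'}")
```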

- SOLVED - how to find physical hard disk | TrueNAS Community

- Q: If it is reported that sda S4D0GVF2 is broken, how to know which physical hard disk it corresponds to.

- A:

- Serial number is marked on physical disk. I usually have a table with all serial numbers for each disk position, so is easy find the broken disk.

- If you have drive activity LEDs, you can generate artificial activity on the disk and watch for its LED. Press CTRL + C to stop it when you're done.

dd if=/dev/sda of=/dev/null bs=1M count=5000

- Use the `Description` field in the GUI to record the location of the disk.
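The "table of serial numbers for each disk position" idea can be combined with `lsblk -d -o NAME,SERIAL`, which lists each whole disk with its serial. Below is a minimal sketch. It assumes you keep your own serial-to-bay table, and the `BAYS` dictionary, the WD serials and the bay names are hypothetical. Given the current `lsblk` output, it answers "which bay is sda in right now?" without relying on sdX staying the same.

```python
"""Find the physical bay of a disk from its current Linux name."""

# Hypothetical table you maintain yourself: serial number -> physical location.
BAYS = {
    "S4D0GVF2": "Bay 1 (top)",
    "WD-WX12345678": "Bay 2",
    "WD-WX87654321": "Bay 3",
}


def parse_lsblk(output: str) -> dict[str, str]:
    """Parse `lsblk -d -o NAME,SERIAL` output into {name: serial}."""
    disks = {}
    for line in output.splitlines()[1:]:  # skip the NAME SERIAL header
        fields = line.split()
        if len(fields) == 2:  # devices without a serial have only one field
            disks[fields[0]] = fields[1]
    return disks


def bay_for(device: str, lsblk_output: str) -> str:
    """Return the bay recorded for `device` (e.g. 'sda'), via its serial."""
    serial = parse_lsblk(lsblk_output).get(device)
    if serial is None:
        return f"{device}: no serial reported"
    return BAYS.get(serial, f"{device}: serial {serial} is not in the table")


if __name__ == "__main__":
    sample = "NAME SERIAL\nsda  S4D0GVF2\nsdb  WD-WX87654321\nsr0\n"
    print(bay_for("sda", sample))
```

On the NAS itself, you could pass in `subprocess.run(["lsblk", "-d", "-o", "NAME,SERIAL"], capture_output=True, text=True).stdout` instead of the sample string.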

- Misc

- SOLVED - disk identification | TrueNAS Community

- Q: This might sound funny, but if you have 5 disks in a raid, how do you identify the faulty drive (physically) in your NAS box?

- A: This article goes through how to identify the disk with no knowledge of the arrangement. An excellent "help me now" guide.

- "This is a NAS data disk and cannot boot system" Error Message - Gillware - Your NAS won't boot and you've received an error message which says "This is a NAS data disk and cannot boot system." Here's what you can do to fix that.


- Troubleshooting

- Hard Drive Troubleshooting Guide (All Versions of FreeNAS) | TrueNAS Community

- This guide covers the most routine single hard drive failures that are encountered and is not meant to cover every situation, specifically we will check to see if you have a physical drive failure or a communications error.

- From both the GUI and CLI

- NVMe drive in a PCIe card not showing

- The PCIe x16 slot needs to support PCIe bifurcation, and bifurcation must be enabled in the BIOS.

- NVME PCIE Expansion Card Not Showing Drives - Troubleshooting - Linus Tech Tips

- Q:

- So, I bought the following product: Asus HYPER M.2 X16 GEN 4 CARD Hyper M.2 x16 Gen 4 Card (PCIe 4.0/3.0)

- Because I have, or plan to have 6 NVME drives (currently waiting for my WDBlack SN850 2TB to come in).

- I know the expansion card is working, because it's where my boot drive is, but the other three drives on the card are not being detected (1 formatted and 2 unformatted). They don't even show up on Disk Management.

- A:

- These cards require your motherboard to have PCIe bifurcation, which not all support. What is your motherboard model? Also, to use all the drives, it needs to be in a fully-connected x16 slot (not just physically, all the pins need to be there too).

- To get all 4 to work, you'd need to put it in the top slot and have the GPU in the bottom (not at all recommended). Those Hyper cards were designed for HEDT platforms with multiple x16 (electrical) slots. The standard consumer platforms don't have enough PCIe lanes for all the NVMe drives you want to install.

- Configure this slot to be in NVMe RAID mode. This only changes the bifurcation; it does not enable NVMe RAID, which is set elsewhere.

- Q:

- [SOLVED] - How to set 2 SSD in Asus HYPER M.2 X16 CARD V2 | Tom's Hardware Forum

- Had to turn on RAID mode in the NVMe drive settings and change PCIeX16_1 to _2.

- Also had to swap drives in the adapter to slot 1&2.

- [Motherboard] Compatibility of PCIE bifurcation between Hyper M.2 series Cards and Add-On Graphic Cards | Official Support | ASUS USA - Asus HYPER M.2 X16 GEN 4 CARD Hyper M.2 x16 Gen 4 Card configuration instructions.

- [SOLVED] ASUS NVMe PCIe card not showing drives - Motherboards - Level1Techs Forums

- Q: In TrueNAS 13, the drives in the ASUS Hyper M.2 x16 Gen 4 card aren’t showing up.

- A:

- Did you configure bifurcation in BIOS?

Advanced --> Chipset --> PCIE Link Width should be x4x4x4x4

- Confirmed, it’s working after enabling x4x4x4x4 bifurcation. Never seen this on my high-end gamer motherboards, but maybe I just passed it by.

- It’s required for any system to use a card like this, though it may be called something else on gaming boards — ASUS likes to refer to it as “PCIe RAID”.
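The reason bifurcation matters can be shown with a little lane arithmetic. Below is a minimal sketch that makes two assumptions. First, M.2 slot 1 on a passive four-slot card like the Hyper M.2 x16 is wired to lanes 0-3, slot 2 to lanes 4-7, and so on. Second, each bifurcated link only reaches the slot wired to its first four lanes. Under those assumptions it shows which M.2 slots get a link for a given BIOS setting and electrical slot width. It is an illustration, not a statement of how any particular board behaves.

```python
"""Which M.2 slots on a passive 4-slot x16 card get a PCIe link?"""


def active_m2_slots(bifurcation: str, electrical_lanes: int = 16) -> list[int]:
    """Return the 1-based M.2 slots that receive a link.

    `bifurcation` is a BIOS-style setting such as "x16", "x8x8",
    "x8x4x4" or "x4x4x4x4". Assumption: M.2 slot n is wired to lanes
    4*(n-1)..4*n-1, and each link only reaches the slot on its first lanes.
    """
    widths = [int(w) for w in bifurcation.split("x") if w]
    slots = []
    first_lane = 0
    for width in widths:
        if first_lane >= electrical_lanes:
            break  # the remaining links have no lanes wired to the card
        slots.append(first_lane // 4 + 1)
        first_lane += width
    return slots


if __name__ == "__main__":
    for setting in ("x16", "x8x8", "x8x4x4", "x4x4x4x4"):
        print(f"{setting:9} -> slots {active_m2_slots(setting)}")
    # An x16-length slot that is only wired x8, set to x4x4:
    print("x4x4 in x8 slot ->", active_m2_slots("x4x4", electrical_lanes=8))
```

With no bifurcation (`x16`), only slot 1 gets a link, which matches the symptom above where the boot drive works but the other three drives are missing.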